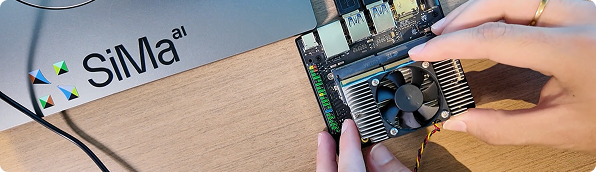

Physical AI: LLMs on Modalix Under 10 Watts

Model Zoo

Explore

Curated LLMs, LMMs, and VLMs, pre-compiled, with one-click import from Hugging Face.

Flexibility

Compile

Select an architecture and quantization; LLiMa compiles an edge-ready binary in hours.

Runtime

Accelerate

Run state-of-the-art models locally at under 10 W, fully automated.

Deployment

Integrate

- OpenAI-Compatible Endpoints

- Model Context Protocol (MCP)

- Retrieval-Augmented Generation (RAG)

- Agent2Agent (A2A)
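Because the endpoints are described as OpenAI-compatible, a client would presumably talk to the device the way it talks to any OpenAI-style server: by POSTing a JSON chat request to a `/chat/completions` route. The following is a minimal standard-library sketch under that assumption. The host name, port, and model name (`modalix.local:8000`, `example-llm`) are hypothetical placeholders, not values documented for LLiMa or Modalix.

```python
import json
import urllib.request

# Hypothetical endpoint and model name: placeholders only, not documented
# LLiMa/Modalix values. Adjust to whatever the device actually exposes.
BASE_URL = "http://modalix.local:8000/v1"
MODEL = "example-llm"


def build_chat_request(prompt: str, base_url: str = BASE_URL,
                       model: str = MODEL) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 128,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response_json: dict) -> str:
    """Pull the assistant text out of an OpenAI-style response body."""
    return response_json["choices"][0]["message"]["content"]


if __name__ == "__main__":
    req = build_chat_request("Summarize today's sensor log.")
    print(req.get_method(), req.full_url)
    # To send it to a running server (assumed to be reachable):
    # with urllib.request.urlopen(req, timeout=30) as resp:
    #     print(extract_reply(json.load(resp)))
```

The point of an OpenAI-compatible surface is that existing clients and SDKs can usually be repointed by changing only the base URL, so no device-specific client code should be needed.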