Unlock the Future of Intelligent Robotics – SiMa Modalix Runs GR00T 1.5!

Robots Need More Than Perception

They need understanding and action.

GR00T 1.5 is NVIDIA’s open foundation model for humanoid robots. It combines vision, language, and generative reasoning to interpret natural commands like “wash the dishes” and turn them into physical actions. Compared to GR00T 1 and competing open-source models, GR00T 1.5 is a major leap forward in teaching robots to understand and execute human commands.

Punching Above Our Weight

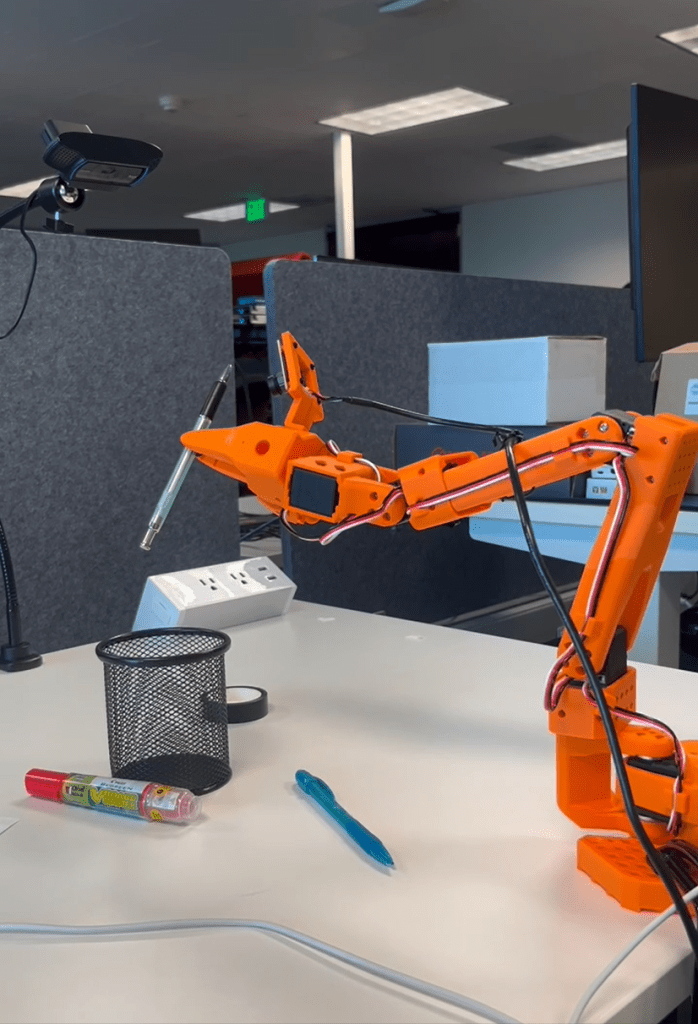

At SiMa.ai, our mission is to bring Physical AI to life by enabling the world’s most advanced AI models to run efficiently on device. Today, we’re excited to share that our team has deployed an early demonstration of the full GR00T 1.5 stack, including both the vision-language model and the diffusion transformer, on the MLSoC™ Modalix System on Module (SoM). The demo runs BF16 (16-bit floating point) inference and responds to the prompt “grab the pens and put them in the holder.”

This milestone shows that foundation models designed for humanoid robots, which once required racks of GPUs, can now run on a single low-power embedded device.

In this demo, the robot arm (pictured above) performed on par with an NVIDIA Jetson Orin NX but within a lower power envelope, highlighting the efficiency advantage of SiMa’s architecture. We are continuing to optimize for performance, power, and accuracy.
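For readers unfamiliar with the BF16 format mentioned above, here is a minimal sketch of what it stores. BF16 (bfloat16) keeps the sign bit, the 8 exponent bits, and the top 7 mantissa bits of a 32-bit float, so it preserves float32's range while giving up precision. The function names below are hypothetical and chosen for illustration only; this is not SiMa's implementation or toolchain, just the number format itself expressed with Python's standard library.

```python
import struct


def float_to_bf16_bits(x: float) -> int:
    """Return the 16-bit bfloat16 pattern for x, rounding to nearest even."""
    if x != x:  # NaN: return a canonical quiet NaN so rounding cannot corrupt it
        return 0x7FC0
    # Reinterpret the value as its 32-bit IEEE 754 single-precision pattern.
    bits32 = struct.unpack("<I", struct.pack("<f", x))[0]
    # Round to nearest even on the 16 low bits that bfloat16 discards.
    rounding_bias = 0x7FFF + ((bits32 >> 16) & 1)
    return ((bits32 + rounding_bias) >> 16) & 0xFFFF


def bf16_bits_to_float(bits16: int) -> float:
    """Expand a bfloat16 pattern back to a float by zero-filling the low bits."""
    return struct.unpack("<f", struct.pack("<I", (bits16 & 0xFFFF) << 16))[0]


def round_trip(x: float) -> float:
    """Show the value a BF16 tensor element would actually hold for x."""
    return bf16_bits_to_float(float_to_bf16_bits(x))


if __name__ == "__main__":
    print(hex(float_to_bf16_bits(1.0)))  # 0x3f80
    print(round_trip(3.14159))           # 3.140625
```

The round trip of 3.14159 to 3.140625 shows the trade-off: roughly two to three significant decimal digits survive, which is often enough for neural network inference while halving memory traffic relative to float32.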

Why This Matters

For robotics customers, this is more than a benchmark. It’s a glimpse of what’s possible:

- Natural interaction: Robots can understand and respond to human instructions without pre-programming.

- Novel object handling: Thanks to GR00T 1.5’s ability to learn from human videos, robots can adapt to new objects on the fly.

- Low-power deployment: Modalix makes this possible on device, with no reliance on cloud GPUs, no network round-trip latency, and no privacy trade-offs.

- Scalability: With multiple Modalix devices, workloads such as vision-language models (VLMs) and diffusion transformers can be distributed across devices for even higher throughput.

With GR00T 1.5 running on Modalix, we can begin to imagine real applications:

- Service robots that clean or organize when given natural instructions.

- Industrial systems that adapt dynamically to new tasks without reprogramming.

- Assistive devices that combine perception, reasoning, and safe physical interaction.

Unlike cloud-based approaches, all of this runs locally, in real time, with low power and no dependency on external bandwidth or servers.

The Path to Truly Intelligent, Autonomous Robotics is Coming into View

SiMa.ai is one of the first companies to run a vision-language-action model like GR00T 1.5 on device. In this demonstration, Modalix delivered performance equivalent to or better than that of competing devices with higher TOPS ratings. This is just the beginning – stay tuned as we continue to advance the state of Physical AI.

Please visit our Industrial Markets page or contact us for more information.