Elevate Your ML Journey


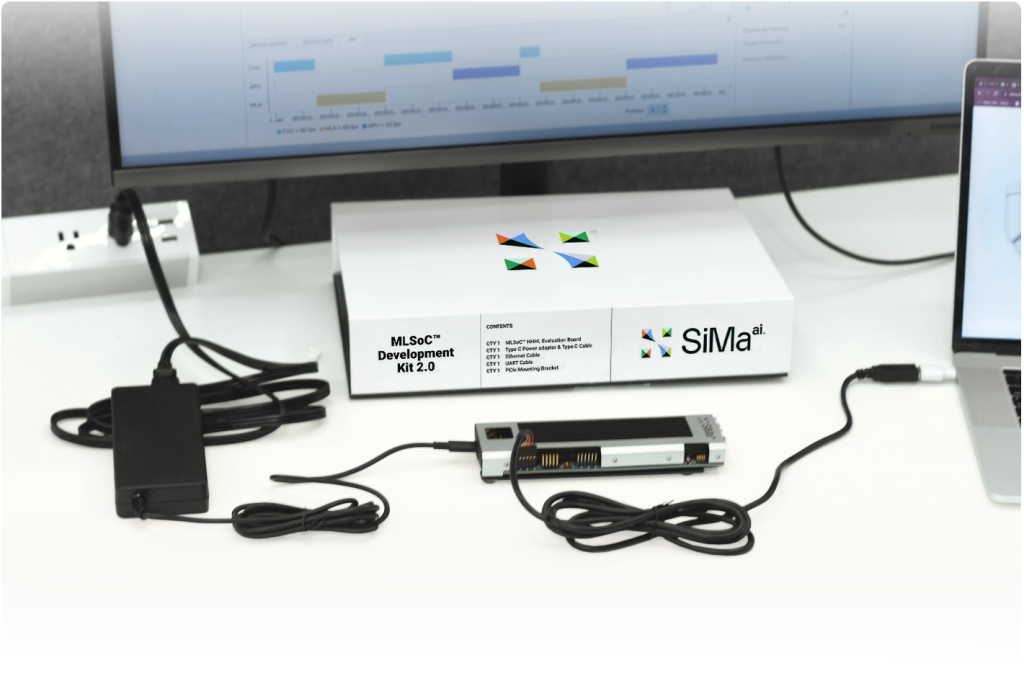

SiMa.ai’s MLSoC Development Kit contains everything you need to evaluate, prototype, and demonstrate computer vision ML applications on SiMa.ai’s purpose-built MLSoC silicon. The kit is built around a compact Developer Board based on our half-height, half-length (HHHL) PCIe production board, modified to expose the interfaces developers use. The board can run stand-alone on a lab bench or be installed in a PCIe platform, and these on-bench and in-PC configurations give the flexibility to support different developer profiles.

*Online purchasing is US-only. To buy outside the US, please fill out the form here.

Edge ML Developers

Quick evaluation, prototyping, and demonstration of edge ML use cases with an all-inclusive kit:

MLSoC Developer Board:

The MLSoC Developer Board is based on our PCIe half-height, half-length production board but is modified to expose developer interfaces and to operate in a lab bench environment. It is a versatile board that uses the SiMa.ai Machine Learning System on Chip (MLSoC) device.

On-bench

In-PC

Low power board

Machine learning accelerator (MLA)

Application processing unit (APU)

Video encoder/decoder

Computer vision unit (CVU)

Contained in Development Kit 2:

Developer Board (HHHL)

USB Type-C power adapter and Type-C to micro-USB cable

Ethernet Cable

UART-to-USB cable

PCIe Mounting Bracket

Palette Software License

Seat License

Ordering information:

SKU# MLSOC-DEV-16GB-200-AB

Development Kit 2 Camera Bundle:

Development Kit 2 with camera bundle to demonstrate GStreamer ML pipelines in real time

- RouteCAM_CU20 – Sony® IMX462 Full HD GigE Camera

- Power-over-Ethernet camera with IEEE 802.3af compliance

- Comes with PoE power adapter and cable

- Houses Sony® Starvis IMX462 CMOS image sensor

- Ultra-low light sensitivity and superior near-infrared performance

- High Dynamic Range (HDR)

- On-board high-performance ISP

- Supports 10BASE-T, 100BASE-TX, and 1000BASE-T modes

- Developer Kit bundled with a Gigabit Ethernet camera

Ordering information:

SKU# MLSOC-DEV-16GB-200-ABA

MLSoC Developer Kit On-Site Accelerate

On-Site Accelerate delivers a customized face-to-face session with a SiMa.ai technical expert, covering Developer Kit bring-up and training on the Palette software to compile, build, and deploy Python and GStreamer-based pipelines on the Developer Board. Include your whole team so everyone gets up to speed quickly and can ask questions in real time as you walk through the development process.

Ordering information:

On-Site Accelerate – DevKit bring-up and training:

MLSOC-DBBU-SW-Training-OA

Available direct and through the following distributors: