Adapting to the “New Normal”

The embedded edge market is a massive $1T+ market that has been surviving on old technology for decades. Why aren’t all of our factories automated with robots? Why don’t we have fully autonomous vehicles to take us around? And where is our advanced, remote medical care? People have been waiting years for these promised technologies, and although technical advances have been made, these types of products are still not widely available.

The frustration of not having these types of products for everyday use has been exacerbated by the COVID-19 pandemic, which has forced all of us to adapt to a “New Normal”. No one predicted the ripple effect this pandemic would have on society as a whole and on technology in particular. With over 100 million confirmed cases of the coronavirus worldwide and over 2 million deaths, a completely new mindset has emerged about how people interact with the world in every facet of their lives: at home, at work, and in society in general.

The pandemic is accelerating large investments in technology, and society eagerly awaits the results as we are all forced to adapt to this “New Normal”. Companies and individuals alike feel a heightened sense of urgency to embrace the innovations needed to transform our world for this new reality, and to quickly upgrade our infrastructure and embedded edge products accordingly.

How will this happen? A key missing enabler is a purpose-built ML platform with the technology needed to bridge the capability gap, address this massive market, and serve today’s needs. For this to happen at scale, the following five elements must come together.

High performance ML at the lowest power possible

To scale embedded edge products beyond a token number of applications, companies need ML technology that delivers high performance at low power. For example, today’s level 4-5 (L4-L5) autonomous vehicle prototypes consume around 2.5-3 kW of power. For L5 autonomous vehicles to become commercially viable, they will need roughly a 30x reduction in power consumption, to <100 W, along with a much smaller physical footprint and less need for active cooling than today’s prototypes. Not surprisingly, there are no autonomous cars on the road just yet, though the need for these energy-efficient vehicles has moved from a nice-to-have to a necessity.
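As a quick sanity check on the 30x figure, the required reduction can be worked out from the numbers quoted above. This is only a back-of-envelope sketch: the prototype range (2.5-3 kW) and the 100 W ceiling come from the text, while the function and constant names are illustrative.

```python
# Back-of-envelope check of the power-reduction figure quoted in the text.
# The function name and constants are illustrative, not part of any product API.

def reduction_factor(current_watts: float, target_watts: float) -> float:
    """Return how many times smaller the target budget is than the current draw."""
    if target_watts <= 0:
        raise ValueError("target_watts must be positive")
    return current_watts / target_watts

PROTOTYPE_WATTS = (2500.0, 3000.0)  # 2.5-3 kW quoted for today's L4-L5 prototypes
TARGET_WATTS = 100.0                # ceiling of the <100 W target

low, high = (reduction_factor(w, TARGET_WATTS) for w in PROTOTYPE_WATTS)
print(f"Required reduction: {low:.0f}x to {high:.0f}x")
```

Because the target is stated as less than 100 W, these factors are lower bounds: any design that lands below the ceiling implies an even larger reduction.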

There’s another critical reason for reducing power consumption in these devices. As these vehicles are deployed in the billions in the future, they need the most efficient power profile possible so that we can begin reversing the atmospheric damage that carbon emissions have done to our planet. According to the New York Times [1], not only are electric cars far more climate friendly than gas-burning ones, they are often cheaper over their lifetime as well. High performance ML at the lowest power possible is a critical enabler for moving autonomous vehicles into large-scale production.

Adding ML to legacy edge compute applications using a safe and secure SoC

ML will finally happen at scale in embedded edge applications such as smart vision, robotics, autonomous systems, automotive, and aerospace and defense when it can be added easily to entrenched, legacy systems. Fortune 500 companies and startups alike have invested heavily in their current technology platforms. Most of them will not rewrite all their code or completely overhaul their underlying infrastructure to integrate ML. To mitigate risk while reaping the benefits of ML, they need technology that lets legacy code and ML run together seamlessly in their systems. This creates an easy path to developing and deploying systems that meet application needs while gaining the intelligence that machine learning brings.

An example of this is Amazon’s Prime Air [2], a future delivery system designed to safely get packages to customers in 30 minutes or less using unmanned aerial vehicles. Amazon is pairing its existing worldwide delivery infrastructure with drones to provide rapid parcel delivery to millions of customers while also increasing the overall safety and efficiency of the transportation system. This cannot be achieved without successfully integrating ML into Amazon’s infrastructure.

An easy-to-use ML experience that skips the learning curve

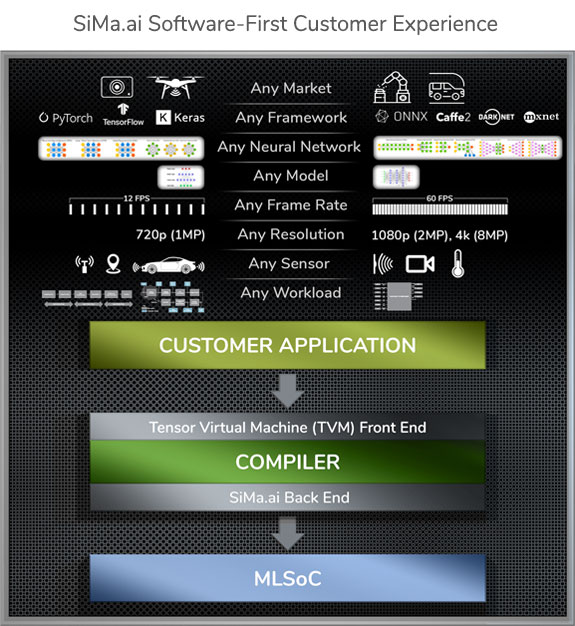

Ease of use is extremely important for making ML accessible to the masses. If it is too difficult to add machine learning to embedded edge applications, or if it takes too long to deploy these products, widespread adoption of ML at the embedded edge will never become a reality. Fortunately, the industry understands this challenge. Innovative companies are adopting approaches that allow any model, any neural network, and any framework, for any workload at any resolution and any frame rate, to come in through any sensor, be efficiently compiled by the software, and be effectively deployed on a purpose-built ML device. For example, SiMa.ai’s Machine Learning SoC (MLSoC™) platform environment eliminates the time-consuming development traditionally needed to customize software code to compiler-specific requirements. It also removes the complexity of combining discrete ML hardware accelerators, vision processors, and applications processors by offering a single-chip solution for embedded edge inference. This approach accelerates development velocity and avoids delivering yesterday’s ML technology in products meant to solve today’s needs.

SiMa.ai’s Software-First customer experience enables the widespread adoption of ML at the embedded edge by supporting any model, any neural network, and any framework for any workload. Input at any resolution and any frame rate, coming through any sensor, is handled by models that the software compiles efficiently and deploys effectively on the purpose-built machine learning device.

Democratization of ML development and deployment

For ML to take off, both its development and its deployment need to be democratized. Previously, products with advanced technologies such as ML were the domain of the few companies that had the scale and could afford them. These technologies now need to spread quickly and far more broadly, both in development and in deployment at scale.

Nowhere is the democratization of ML deployments needed more urgently than in the medical industry, where the COVID-19 pandemic is wreaking havoc. The pandemic has created a surge in demand for medical device companies that serve hospitals to offer secure healthcare diagnostics through semi-autonomous and fully autonomous equipment. In addition, the same medical device companies are looking to offer in-home care products that meet the privacy, safety, and regulatory requirements necessary for product certification. The wide-scale deployment of these ML-enabled products cannot come soon enough.

Joint innovation, solving for customers’ real-life systems and applications

Advanced machine learning technology is coming just in the nick of time, as customers are eagerly awaiting smart products to address the “New Normal” at the embedded edge. For example, SiMa.ai’s customers are building robots and cobots with a safe human-machine interface that can support computer vision and complete machine learning compute at <20 W, as opposed to the 100-300 W needed today. Our customers are also building intelligent electrical systems for semi-autonomous and fully autonomous cars with machine learning compute at <10 W (L2+) and <100 W (L4-5), as opposed to the 1000-3000 W required by alternatives. Others are building surveillance and medical products that perform all the ML compute and analytics in <5 W.

Final Thoughts

We started SiMa.ai to help solve these issues for our customers. Two years into this journey, we are excited to play our part in enabling the widespread scaling and adoption of ML in embedded edge applications.

As recently announced, we are deepening our software and hardware technology bench by adding a new design center in India. This expansion of our talent, beyond the teams we have in Silicon Valley, Ukraine, and Serbia, will give SiMa.ai the solid foundation to stay on course and meet the needs of our customers as we all adapt to the “New Normal”.

The pandemic has catalyzed an acceleration in innovation and technology adoption that together will improve our quality of life. I’m optimistic about 2021, and our team feels grounded in the opportunities at hand.

[1] https://www.nytimes.com/interactive/2021/01/15/climate/electric-car-cost.html

[2] https://www.amazon.com/Amazon-Prime-Air/b?ie=UTF8&node=8037720011