SiMa.ai’s MLSoC Development Kit

Highlights

SiMa.ai’s MLSoC Development Kit contains everything you need to evaluate, prototype and demonstrate your computer vision ML applications on a developer board built around SiMa.ai’s purpose-built MLSoC silicon. The kit includes a compact Developer Board based on our HHHL PCIe production board, modified to expose the interfaces developers use. It can operate stand-alone on a lab bench or be embedded in a PCIe platform. These on-bench and in-PC configuration options offer the flexibility to support different developer profiles.

*Online purchasing is US-only. To buy outside the US, please fill out the form here.

Edge ML Developers: Quick Evaluation, Prototyping and Demonstration of edge ML use cases with an all-inclusive kit:

Out-of-Box

Developers want to get the tools and hardware up and running quickly so they can evaluate and learn the new SiMa.ai edge ML platform. The Development Kit provides an out-of-box set of all the components you need, plus a guide, so that setup is quick and developers can focus on their evaluation.

Evaluate

The first thing an ML developer will want to do is establish a model’s performance and accuracy on the target platform and assess the capabilities, time and effort involved in compiling models of interest. Palette™ quantizes and compiles a developer’s model using its compiler. Use Palette’s silicon software image build and deploy tools to program the developer board. The developer kit can execute these builds and report KPIs such as frames per second (fps), latency, accuracy, % loading of compute resources and memory footprint. Palette runs in a Docker container on a desktop and supports ARM cross-compilation, with libraries to generate code for the SoC and a platform build, test and deploy tool suite for creating complete ML applications.
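As a rough illustration of how two of these KPIs relate, the sketch below derives fps and latency from per-frame timestamps. It is a minimal, generic example: the function name `summarize_kpis` and the timestamp values are hypothetical and are not part of Palette or its reporting tools.

```python
from statistics import mean

def summarize_kpis(start_times_s, end_times_s):
    """Return throughput (fps) and latency figures from per-frame timestamps."""
    if len(start_times_s) != len(end_times_s) or not start_times_s:
        raise ValueError("need matching, non-empty timestamp lists")
    # Latency is measured per frame, from entry to exit of the pipeline.
    latencies_ms = [(e - s) * 1000.0 for s, e in zip(start_times_s, end_times_s)]
    # Throughput counts completed frames over the wall-clock span of the run.
    wall_clock_s = max(end_times_s) - min(start_times_s)
    return {
        "fps": len(end_times_s) / wall_clock_s,
        "mean_latency_ms": mean(latencies_ms),
        "max_latency_ms": max(latencies_ms),
    }

# Hypothetical run: 4 frames enter every 10 ms, each takes 25 ms end to end.
starts = [0.000, 0.010, 0.020, 0.030]
ends = [s + 0.025 for s in starts]
kpis = summarize_kpis(starts, ends)
print(kpis)
```

Because frames overlap in a pipelined design, throughput can exceed the reciprocal of the per-frame latency, which is why the two figures are reported separately.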

Prototype

The next step is to integrate this model into a potential application or use case, including ML model pre- and post-processing functions. To accelerate the prototyping phase, Palette adds the ability to quickly code, build and evaluate a pipeline using your own ML models. Developers can use Python scripting with SiMa APIs to build the functional pipeline, avoiding the complex embedded optimization often needed for on-device execution. These APIs bind your Python code to the device execution environment, so it executes on the device as a complete functioning pipeline. Pushbutton build and deploy loads this image onto the Developer Board for execution. This proof-of-concept application can validate the functionality of the pipeline and serve as an early demonstration vehicle.

Demonstrate

Quickly bring a real-time data stream to the platform, execute a pipeline on this data stream and display the performance results in real time as it runs on the MLSoC silicon, for use case demonstrations. GStreamer example pipelines included in the Palette software release can be run out of the box to demonstrate real-time streaming performance. Customer pipelines can leverage these pipeline designs, which take advantage of GStreamer to get higher utilization of compute resources and higher fps. The developer board processes the pipelines, and the resulting metadata is displayed as an overlay on the host PC. Add more cameras for multi-camera processing.
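For readers new to GStreamer, the sketch below shows what a pipeline description looks like. It uses only stock GStreamer elements (`videotestsrc`, `videoconvert`, `fakesink`) and is not one of the Palette example pipelines; the helper name `build_test_pipeline` and its defaults are hypothetical, and any SiMa.ai-specific plugins are deliberately left out.

```python
import shlex

def build_test_pipeline(width=1920, height=1080, fps=30, num_frames=300):
    """Return a gst-launch-1.0 pipeline description built from stock elements."""
    caps = f"video/x-raw,width={width},height={height},framerate={fps}/1"
    stages = [
        f"videotestsrc num-buffers={num_frames}",  # synthetic source standing in for a camera
        caps,                                      # caps filter fixing resolution and frame rate
        "videoconvert",                            # pixel-format conversion
        "fakesink sync=true",                      # discards frames, paced by the pipeline clock
    ]
    # GStreamer links elements with " ! " in a textual pipeline description.
    return " ! ".join(stages)

description = build_test_pipeline()
# Argument list suitable for subprocess.run on a machine with GStreamer installed.
command = ["gst-launch-1.0", *shlex.split(description)]
print(" ".join(command))
```

A real demonstration would replace the synthetic source with a camera or network source and insert inference and overlay stages between the source and the sink.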

MLSoC Developer Board:

The MLSoC Developer Board is based upon our PCIe half-height, half-length production board but is modified to operate in a lab bench environment with access to the interfaces developers use. It is a versatile board that uses the SiMa.ai Machine Learning System on Chip (MLSoC) device.

Some of the key features are:

- On-bench: The PCIe edge connector is covered in a protective coating and a plate with four footings sits under the board. A micro-USB connector provides power for operation on a lab bench.

- In PC: The PCIe form factor (68.9mm x 160mm) uses a standard 98-pin PCB edge connector to slot into any standard host PC or motherboard. A bracket is included to help secure the board in a PC.

- Low power board. Typical workloads 10-15W. Supports PCIe Gen 4.0 up to x8 lanes, LPDDR4 x4, I2C x2, eMMC, µSD card, QSPI-8 x1, 1G Ethernet x2 ports via RJ45, UART x2, and GPIO interfaces.

- Machine learning accelerator (MLA) – providing up to 50 Tera Ops Per Second (50 TOPS) for neural network computation.

- Application processing unit (APU) – a cluster of four ARM Cortex-A65 dual-threaded processors operating at up to 1.15 GHz to deliver up to 15K Dhrystone MIPS, eliminating the need for an external CPU or PC host.

- Video encoder/decoder – supports the H.264/H.265 compression standards with support for baseline/main/high profiles, 4:2:0 subsampling with 8-bit precision. The encoder supports rates up to 4Kp30, while the decoder supports up to 4Kp60.

- Computer vision unit (CVU) – consists of a four-core Synopsys ARC EV74 video processor supporting up to 600 16-bit GOPS, incorporated for optimized execution of computer vision algorithms used in ML pre- and post-processing and other pipeline processing functions.
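To put the quoted figures in perspective, the following back-of-the-envelope sketch derives two numbers from the feature list. It assumes that "4K" means 3840x2160, which the list does not state, and it combines peak TOPS with typical board power, which are not necessarily measured under the same workload, so the efficiency range is only a rough bound and not a published specification.

```python
# Figures quoted in the feature list.
PEAK_TOPS = 50.0
TYPICAL_POWER_W = (10.0, 15.0)

# Assumption: "4K" means 3840x2160 (UHD); the feature list gives no exact resolution.
WIDTH, HEIGHT = 3840, 2160
# 4:2:0 at 8 bits: 1 luma byte plus 0.5 chroma byte per pixel on average.
BYTES_PER_PIXEL_420_8BIT = 1.5

def tops_per_watt(tops, watts):
    """Simple ratio of compute throughput to power draw."""
    return tops / watts

def raw_video_rate_mb_s(fps):
    """Uncompressed 4:2:0 8-bit data rate in MB/s (1 MB = 10**6 bytes)."""
    return WIDTH * HEIGHT * BYTES_PER_PIXEL_420_8BIT * fps / 1e6

efficiency_low = tops_per_watt(PEAK_TOPS, max(TYPICAL_POWER_W))
efficiency_high = tops_per_watt(PEAK_TOPS, min(TYPICAL_POWER_W))
encode_rate = raw_video_rate_mb_s(30)  # encoder limit: 4Kp30
decode_rate = raw_video_rate_mb_s(60)  # decoder limit: 4Kp60

print(f"Rough efficiency bound: {efficiency_low:.2f} to {efficiency_high:.2f} TOPS/W")
print(f"Raw 4Kp30 stream: {encode_rate:.1f} MB/s, raw 4Kp60 stream: {decode_rate:.1f} MB/s")
```

The raw data rates indicate how much uncompressed video the codec block would move at its quoted limits under these assumptions.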

Contained in Development Kit 2:

- Developer Board (HHHL)

- Type-C power adapter and Type-C to micro-USB cable

- Ethernet Cable

- UART Cable to USB

- PCIe Mounting Bracket

Palette Software

- Seat License

Ordering information:

- SKU# MLSOC-DEV-16GB-200-AB

Development Kit 2 Camera Bundle:

Development Kit 2 with Camera bundle to demonstrate GStreamer ML pipelines in real-time

RouteCAM_CU20 – Sony® IMX462 Full HD GigE Camera

- Power-over-Ethernet camera with IEEE 802.3af compliance

- Comes with PoE power adapter and cable

- Houses Sony® Starvis IMX462 CMOS image sensor

- Ultra-low light sensitivity & Superior near-infrared performance

- High Dynamic Range (HDR)

- On-board high-performance ISP

- Supports 10BASE-T, 100BASE-TX and 1000BASE-T modes

Developer Kit Bundled with 1 Gb Ethernet Camera

Ordering information:

- SKU# MLSOC-DEV-16GB-200-ABA

MLSoC Developer Kit On-site Accelerate

On-Site Accelerate delivers a customized face-to-face session with a SiMa.ai technical expert, covering Developer Kit bring-up and training on the Palette software to compile, build and deploy Python and GStreamer based pipelines on the Developer Board. Include your whole team so everyone gets up to speed quickly and can ask questions in real time as you walk through the development process.

Ordering information:

- On-Site Accelerate – DevKit bring-up and training: MLSOC-DBBU-SW-Training-OA

Available Direct and through the following Distributors:

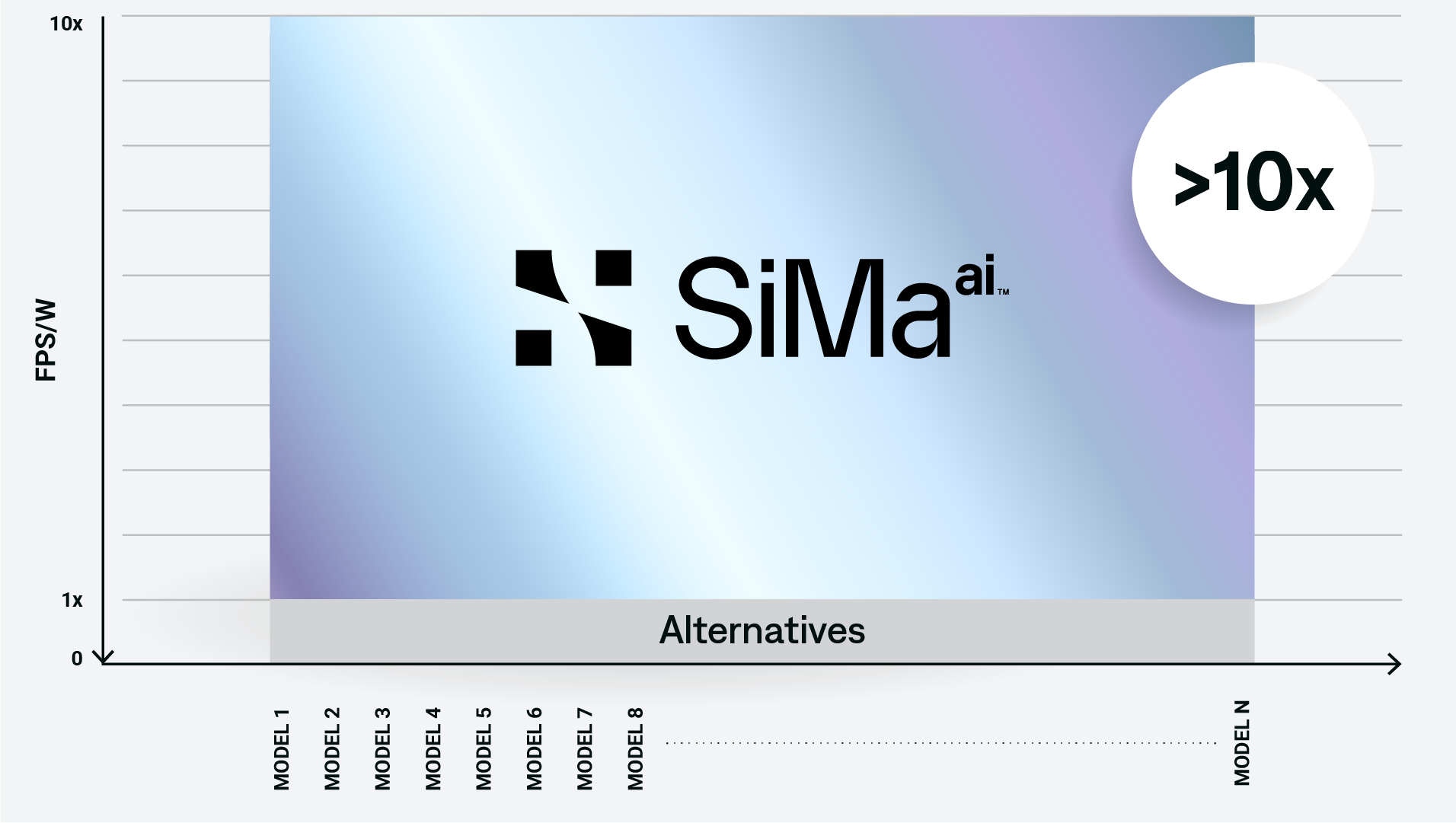

10x better than alternatives

Amazing power efficiency. Blazing-fast processing. Get immediate results with 10x better performance per watt than the competition. The MLSoC enables real-time ML decision making while addressing performance, space, power, and cooling requirements. The SiMa.ai MLSoC™ provides the best performance per watt because it’s purpose-built for the embedded edge market, not adapted for it.

HERE’S HOW WE DO IT

To address customer problems and achieve best-in-class performance per watt, we knew a software-centric machine learning solution that works in conjunction with a new innovative hardware architecture would be required.

Our radically new architecture leverages a combination of hardware and software to precisely schedule all computation and data movement ahead of time, including internal and external memory to minimize wait times.
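To illustrate the general idea of planning computation and data movement ahead of time, here is a toy static schedule in which the load of the next data tile overlaps the compute of the current one. This is not SiMa.ai's scheduler: the function `static_schedule`, the two-buffer assumption, the single load engine and the timings are all hypothetical.

```python
def static_schedule(num_tiles, load_ms, compute_ms):
    """Plan load and compute start times for every tile before execution begins.

    Assumes two buffers: the load of tile i+1 may overlap the compute of tile i,
    but a buffer is reused only after the compute that read it has finished.
    """
    if num_tiles < 1:
        raise ValueError("num_tiles must be at least 1")
    load_start, compute_start = [], []
    for i in range(num_tiles):
        # Loads are serialized on one hypothetical data-movement engine.
        t = load_start[i - 1] + load_ms if i > 0 else 0.0
        # With two buffers, tile i reuses the buffer that held tile i-2.
        if i >= 2:
            t = max(t, compute_start[i - 2] + compute_ms)
        load_start.append(t)
        # Compute waits for its own data and for the previous compute to finish.
        c = t + load_ms
        if i > 0:
            c = max(c, compute_start[i - 1] + compute_ms)
        compute_start.append(c)
    total_ms = compute_start[-1] + compute_ms
    return load_start, compute_start, total_ms

# Hypothetical workload: 4 tiles, 2 ms to load and 3 ms to compute each.
loads, computes, overlapped_ms = static_schedule(4, load_ms=2, compute_ms=3)
serial_ms = 4 * (2 + 3)
print(f"serial: {serial_ms} ms, statically overlapped: {overlapped_ms} ms")
```

Because every start time is known before execution, the compute unit in this toy model waits only for the first load; the remaining loads are hidden behind compute.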

We designed only the essential hardware blocks required for deep learning operations and put all necessary intelligence in software, while including hardware interfaces to support a wide variety of sensors.

Any computer vision application

Whether you’re building smarter drones, intelligent robots, or autonomous systems, the SiMa.ai platform allows you to quickly and easily run any neural network model from any framework on any sensor with any resolution for any computer vision application. Run your applications, as is, right now on our MLSoC™.

HERE’S HOW WE DO IT

Our MLSoC Software Development Kit (SDK) is designed to run any of your computer vision applications seamlessly for rapid and easy deployment.

Our ML compiler front end leverages the open-source Tensor Virtual Machine (TVM) framework, and thus supports the industry’s widest range of ML models and ML frameworks for computer vision.

Bring your own model (BYOM) or choose one of our many pre-built and optimized models. SiMa.ai’s software tools will allow you to prototype, optimize, and deploy your ML model in three easy steps.

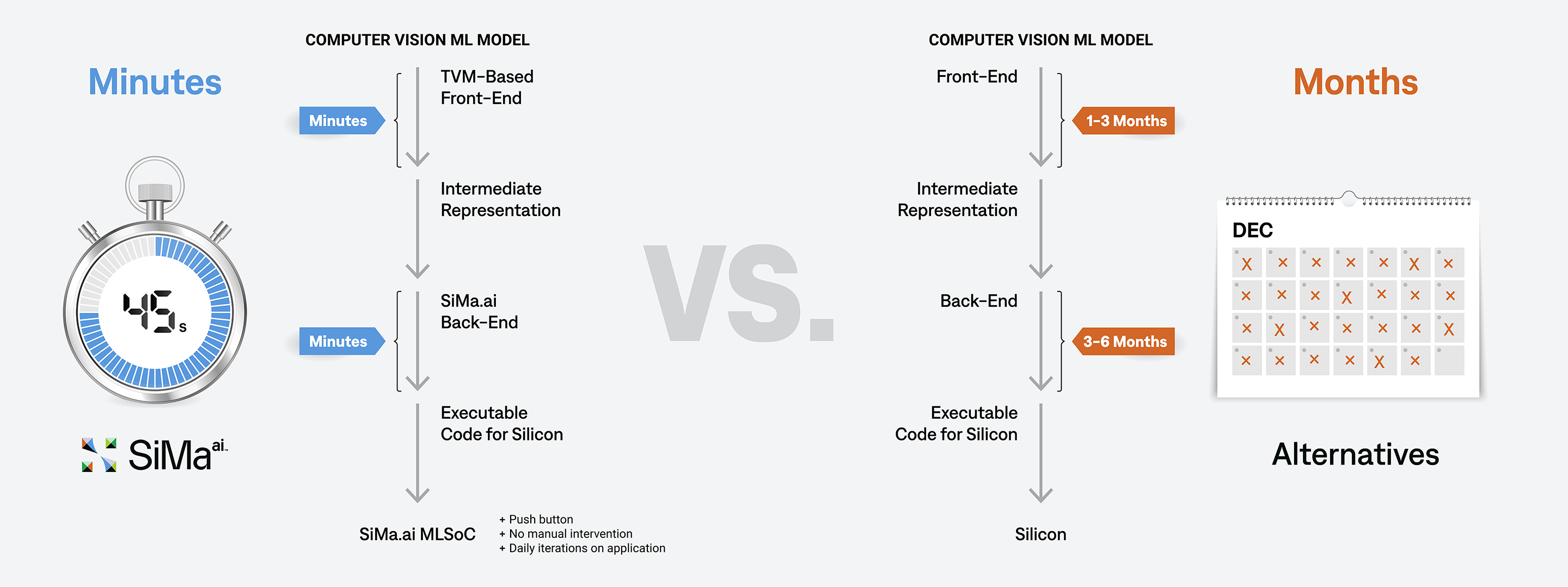

Push-button results

The ML industry is silicon-centric. Porting an ML application to a new platform is hard and time-consuming, and performance gains are uncertain. At SiMa.ai, we co-designed the software and hardware from day one. As a result, our industry-leading software suite allows you to get results within a matter of minutes, with a simple push of a button and without the need to hand-optimize your application, saving you months of development time.

HERE’S HOW WE DO IT

We listened to our customers and it was clear that we had to make the software experience push-button. Our innovative software front-end automatically partitions and schedules your entire application across all of the MLSoC™ compute subsystems.

Customers can leverage our software APIs to generate highly optimized MLSoC code blocks that are automatically scheduled on these subsystems. We created a suite of specialized and generalized optimization and scheduling algorithms for our back-end compiler. These algorithms automatically convert your ML network into highly optimized assembly code that runs on the Machine Learning Accelerator (MLA). No manual intervention needed for improved performance.

Your entire application can be deployed to the SiMa.ai MLSoC with a push of a button. Build and deploy your application in minutes in a truly effortless experience.