Software is Powering the AI Revolution

This isn’t the first time artificial intelligence (AI) has been all the rage and set to change the world. What has fundamentally changed, however, is the technology that underpins it. A decade ago, few people had heard of deep learning. It is the technology that helps you find pictures of the kids at the beach on your phone and helps Alexa and Google Assistant understand what you say. Lately it has become more creative too, generating pictures of people and whatever else you ask for.

Resurgence of AI

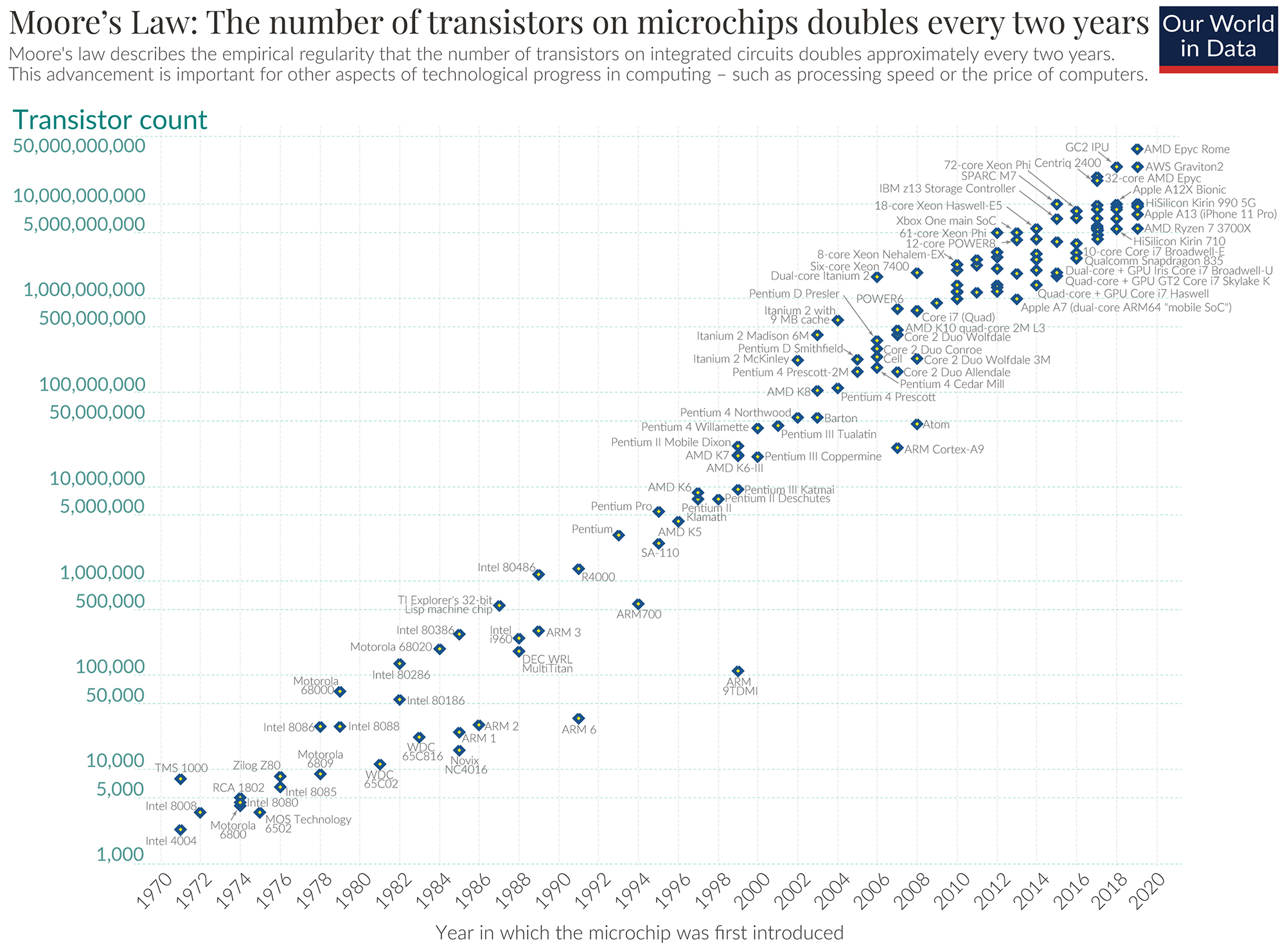

So, what changed in the last decade? Three key technology elements came together to create this success: algorithms, data, and compute. Neural networks came of age and were rechristened as deep learning, fueled by the more than 50 zettabytes of data generated every year by the four billion people connected to the internet. This learning doesn’t come cheap, and the strong growth in computational power between 1970 and 2010 (roughly a million-fold increase in the number of transistors per chip) went a long way toward making it possible.
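The million-fold figure is roughly what Moore’s law predicts. As a back-of-the-envelope check, the sketch below assumes transistor counts double about every two years (the commonly cited Moore’s law rate, an assumption rather than a number read off the chart) and compounds that over the forty years from 1970 to 2010:

```python
# Back-of-the-envelope check of transistor growth, 1970-2010.
# ASSUMPTION: Moore's law doubling period of roughly two years.
DOUBLING_PERIOD_YEARS = 2
start_year, end_year = 1970, 2010

# Number of doublings over the period (40 years / 2 years = 20).
doublings = (end_year - start_year) / DOUBLING_PERIOD_YEARS

# Compound growth: 2 raised to the number of doublings.
growth = 2 ** doublings

print(f"{doublings:.0f} doublings -> about {growth:,.0f}x more transistors per chip")
```

Twenty doublings come to 2^20, or about 1.05 million, in line with the roughly million-fold growth quoted above.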

(Source: Our World in Data, by Max Roser and Hannah Ritchie)

Role of Compute

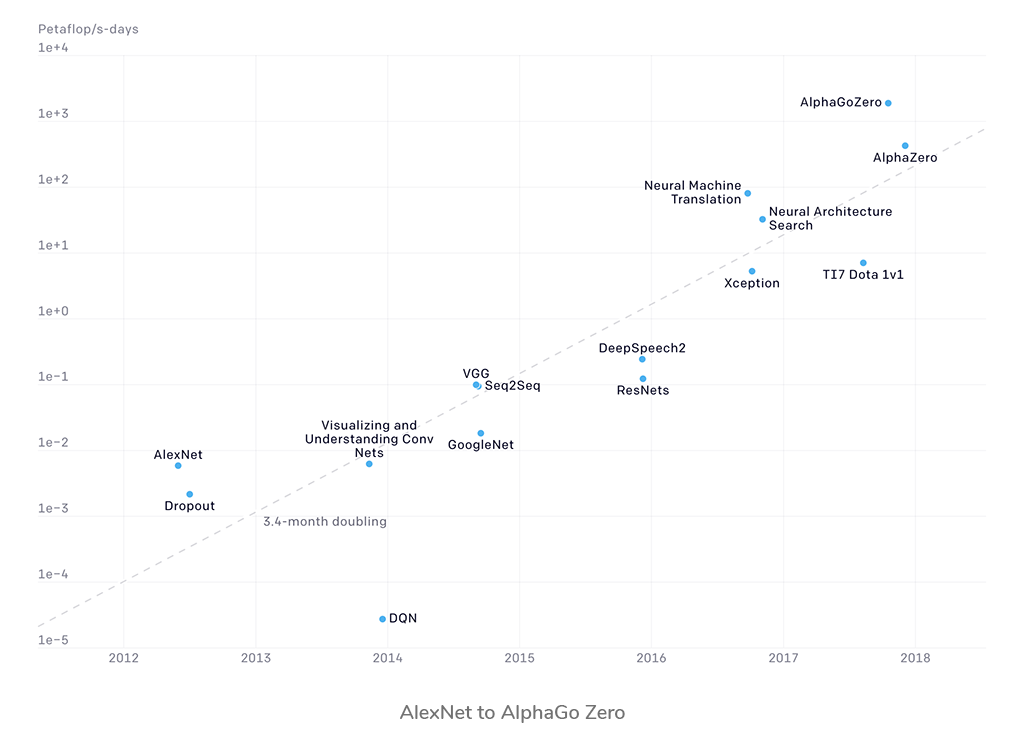

The graph above plots many of the key models of the last decade by the year they were published and the computation required to train them. The rapid progress in research lines up with exponential growth in the compute used (doubling every 3.4 months on average) over the same period, with more than a 100,000x increase from AlexNet to AlphaGo Zero. GPT-3 (not shown) is estimated to have cost about $4.6 million to train, the equivalent of roughly 350 years on a single V100, the fastest GPU available at the time. None of this would have been possible without advances in hardware and in the software to program it.
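To get a feel for how quickly a 3.4-month doubling period compounds, here is a small sketch. It assumes perfectly steady exponential growth, a simplification of the scattered points in the graph, and the helper names `growth_factor` and `months_to_reach` are illustrative:

```python
import math

# Doubling period for AI training compute, as reported in the OpenAI analysis.
DOUBLING_MONTHS = 3.4


def growth_factor(months: float, doubling_months: float = DOUBLING_MONTHS) -> float:
    """Multiplicative growth after `months` of steady doubling."""
    return 2 ** (months / doubling_months)


def months_to_reach(factor: float, doubling_months: float = DOUBLING_MONTHS) -> float:
    """Months of steady doubling needed to grow by `factor`."""
    return doubling_months * math.log2(factor)


print(f"Growth per year: ~{growth_factor(12):.1f}x")
print(f"Months to grow 100,000x: ~{months_to_reach(100_000):.0f}")
```

Under that assumption, compute grows by roughly 11.5x per year, and a 100,000x increase takes about 56 months, a little under five years, which is consistent with the gap between AlexNet (2012) and AlphaGo Zero (2017).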

(Source: OpenAI https://openai.com/blog/ai-and-compute/)

These advances in AI are being leveraged in the products we use every day. Although inference often needs significantly fewer computational resources than training, computational cost continues to be a limiting factor. This has created a big business opportunity, with countless companies building new hardware to lower this cost. These efforts span everything from large-scale data centers down to tiny microcontrollers, each with its own requirements, and are opening up many different design spaces for these vendors to pursue.

Software as an Afterthought

The one important piece that is often forgotten in all of this is the software.

Having been at the center of this AI resurgence at Google Brain, I saw firsthand the groundbreaking research driven by amazing ML researchers like Andrew Ng, Quoc Le, and Samy Bengio, working together with many people from different backgrounds in one room. Their ideas were made possible by scaling them up to run on thousands of machines with DistBelief, our first internal software for deep learning. That effort was driven by Jeff Dean, Matthieu Devin, and myself, along with a number of other folks.

Beyond the earliest runs across thousands of CPU machines, this same software allowed us to train these models on hundreds of GPGPUs (which were much better optimized for this kind of computation), and eventually on Google’s custom-built TPUs. By the time we got to TPUs, we had rebuilt the software from scratch (now known as TensorFlow) to take advantage of the newer hardware and set the stage for the next generation of algorithms. This also let us bring the fruits of this research to the wider world, and the software is now available to everyone.

One common mistake hardware builders make is to push an amazing new chip design that delivers exponentially more FLOPS than anything else out there, but without the software to enable it. If there’s one strong reason for NVIDIA’s success in AI with GPUs, it’s that their CUDA software stack integrates with popular ML frameworks like TensorFlow. Sadly, not many companies have learned from this example.

What new hardware really needs is the entire software stack that lets users run their existing models on it with little to no change. What matters is the performance delivered on these models, not benchmark performance on artificial workloads such as general matrix multiplies (GEMMs).

Many people tend to view AI and ML through a silicon-centric lens. In reality, a software-centric outlook is what needs to guide the silicon. This is a steep learning curve for companies, and one that SiMa.ai has already figured out. Their “Software-First” customer experience promises widespread adoption of ML at the embedded edge by supporting any resolution, any frame rate, and any neural network model and workload, using efficient software compilation to deploy effectively on their purpose-built machine learning device.

Opportunities for AI

People often ask which areas AI is going to impact, and the short answer is: everything. Just as software has become an inextricable part of today’s products, AI will go further still. The first wave of applications we are seeing today is in areas where AI enables significant innovation, especially tasks that have traditionally been hard for automated systems, such as image recognition and natural language understanding.

There have been a large number of opportunities and businesses applying smarter image understanding to new domains. With huge amounts of new data generated all the time, especially images and video, processing that data at the edge is vital. Access to faster computational power through ML accelerators, especially at the edge, is enabling many new applications. There are opportunities both for companies building new hardware and for those building and providing end-to-end solutions in domains such as physical security. SiMa.ai’s machine learning SoC (MLSoC™) platform environment, for example, addresses the complexity of combining discrete ML hardware accelerators, vision processors, and applications processors by offering a single-chip solution for embedded edge inference.

With the rapid improvements in natural language understanding over the last two to three years, there is growing interest in innovative applications that unlock the large troves of text documents every business has accumulated since the advent of digitization. Here the bottleneck is less about data size, since text compresses quite well, and more about model size: GPT-3, for example, has 175 billion parameters, requiring about 700 GB just to load the model into memory at 4 bytes (32 bits) per parameter. These models are much more likely to run in the data center (cloud) and leverage ML accelerators there.
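The 700 GB figure follows directly from the parameter count. Here is a minimal sketch, assuming weights stored as 32-bit floats and counting only the weights themselves (no activations, optimizer state, or runtime overhead). The fp16 and int8 entries are illustrative lower-precision formats, not figures from the text, and the helper name `weight_memory_gb` is hypothetical:

```python
# Rough memory needed just to hold a model's weights.
# ASSUMPTION: ignores activations, optimizer state, and framework overhead.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: float, dtype: str = "fp32") -> float:
    """Return weight storage in gigabytes (10**9 bytes) for the given precision."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


GPT3_PARAMS = 175e9  # 175 billion parameters

for dtype in BYTES_PER_PARAM:
    print(f"{dtype}: {weight_memory_gb(GPT3_PARAMS, dtype):,.0f} GB")
```

Lower precision shrinks the footprint proportionally, but even at one byte per parameter the weights alone come to about 175 GB, far beyond the memory of a typical edge device, which is why models of this size tend to live in the data center.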

The paradigm for interacting with computers is also evolving rapidly. As machines get better at understanding speech and natural language, new applications beyond voice assistants are becoming possible. These are a great boon for accessibility for folks with differing abilities.

During the past year, with many of us spending large portions of our time online, applying AI to technologies such as AR and VR is helping accelerate both of them, along with our integration with the virtual world. Wouldn’t a VR experience be a lot better than Zoom this year?

Most of the attention in AI has gone to unstructured data such as images, speech, and text, as machine understanding of these data types has gone from barely workable to human level. Yet huge volumes of structured data still sit in, and are generated every day by, all the applications consumers and businesses use. Unnoticed by many, deep learning has provided a huge step forward in this area as well and is creating an opportunity for our world to truly become data driven.