Edge MLOps: Architecture, Challenges, and Platform

Table of Contents

- 1. Introduction

- 2. Edge MLOps Architecture

- 2.1 Field Management

- 2.2 Model Continuous Improvement

- 3. Edge MLOps Challenges and Platform Comparison

- 4. Edge MLOps Reality and Gaps

- 5. SiMa.ai’s Criteria for Effective MLOps

1. Introduction

In technology, the goal of operations is to keep production systems running continuously and efficiently. As Machine Learning (ML) gains popularity and ML models are deployed in production environments, MLOps has emerged as an essential practice. With the rise of the Internet of Things (IoT) and AI-enabled edge devices, a parallel discipline, Edge MLOps, has become necessary as well. The proliferation of smart devices at the edge introduces unique challenges: latency, unreliable connectivity, management of large device fleets, and the need for real-time processing. Edge MLOps therefore extends the principles of MLOps to these edge environments to ensure robust, efficient operation with minimal human intervention.

In essence, Edge MLOps seeks to create a resilient and scalable ecosystem where machine learning models can thrive, even at the outermost periphery of a network. By incorporating automation and reducing the need for direct human involvement, Edge MLOps enables efficient data management and continuous model improvement for sophisticated AI systems on edge devices.

Getting MLOps right from the start is crucial for keeping machine learning operations scalable, reliable, and efficient as they grow. A robust, automated MLOps pipeline enables seamless, continuous model improvement and helps prevent the accuracy degradation caused by data drift. Data drift is a challenge inherent to machine learning systems and has no direct counterpart in traditional software systems.
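Data drift is typically detected by comparing the distribution of recent production data against a reference window. The code below is a minimal sketch that computes the Population Stability Index (PSI) over a single scalar such as model confidence. PSI is one common drift statistic, chosen here only for illustration; this post does not prescribe a method. The function names, the 10-bin histogram, and the 0.2 alert threshold are all assumptions.

```python
import math
from typing import Sequence


def population_stability_index(
    reference: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """Compare two samples of one scalar (e.g., model confidence) using PSI.

    Bin edges come from the reference sample's min/max; values outside that
    range are clamped into the first or last bin.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # avoid zero width when all values are equal

    def histogram(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values
            counts[idx] += 1
        total = len(values)
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / total, eps) for c in counts]

    ref_p, cur_p = histogram(reference), histogram(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))


def drift_detected(reference, current, threshold: float = 0.2) -> bool:
    # 0.2 is a commonly cited rule-of-thumb PSI threshold, not a universal constant
    return population_stability_index(reference, current) > threshold
```

A check like this would normally run on a rolling window, per feature or per class. An alert is better treated as a signal for review than as an automatic retraining trigger.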

There are numerous MLOps articles and blogs available online. For instance, the Neptune MLOps blog offers an exhaustive list and a comprehensive summary of MLOps platforms. However, very few sources address the entire end-to-end architecture, data flow, and components of MLOps, and the Neptune blog makes clear that most MLOps platforms cover only a limited set of capabilities. We have therefore written this post to detail the architecture, challenges, and gaps of MLOps, along with our criteria for effective Edge MLOps solutions.

2. Edge MLOps Architecture

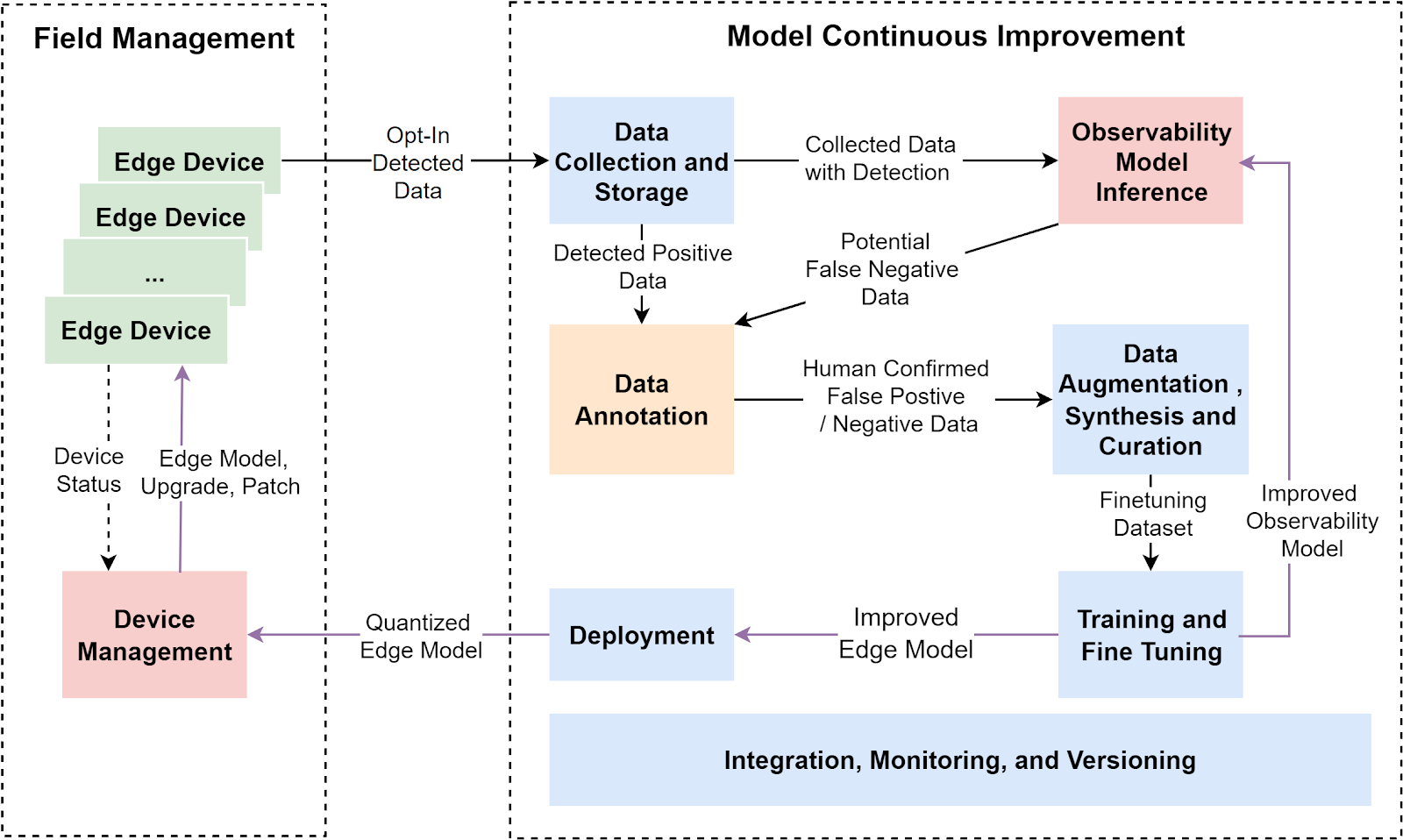

Diagram: Edge MLOps Architecture

The above diagram illustrates Edge MLOps components and architecture. The system is broken into two main areas: Field Management and Model Continuous Improvement. Here's a detailed explanation:

2.1 Field Management

Edge Device:

- Edge devices are deployed in the field to perform real-time processing with the deployed model and collect data for model monitoring and improvement.

- Edge devices may run on batteries and often face unreliable network connections. In addition, a single deployment can contain anywhere from one to tens of thousands of edge devices.
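Edge devices can be offline for long periods and have little spare storage, so device-side data upload is often built as a bounded store-and-forward queue. The sketch below assumes a hypothetical `send` callback that returns `True` on a successful upload. `StoreAndForwardBuffer` is an illustrative name, not a SiMa.ai API. Dropping the oldest record when the queue is full is only one possible policy. Retries here are immediate, whereas a real client would add backoff between attempts.

```python
from collections import deque
from typing import Callable, Deque


class StoreAndForwardBuffer:
    """Bounded on-device queue that uploads records when the network allows.

    When full, the oldest record is evicted so memory stays bounded on
    constrained devices (a policy choice; some deployments instead drop the
    newest record or persist to flash).
    """

    def __init__(self, capacity: int, send: Callable[[dict], bool]):
        self._queue: Deque[dict] = deque(maxlen=capacity)
        self._send = send  # hypothetical uploader: True means the upload succeeded
        self.dropped = 0

    def enqueue(self, record: dict) -> None:
        if len(self._queue) == self._queue.maxlen:
            self.dropped += 1  # deque(maxlen=...) evicts the oldest item on append
        self._queue.append(record)

    def flush(self, max_attempts: int = 3) -> int:
        """Upload queued records in order; stop at the first persistent failure."""
        sent = 0
        while self._queue:
            record = self._queue[0]
            # any() stops at the first successful attempt
            if not any(self._send(record) for _ in range(max_attempts)):
                break  # network likely down; keep the record for the next flush
            self._queue.popleft()
            sent += 1
        return sent

    def __len__(self) -> int:
        return len(self._queue)
```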

Device Management:

- Monitors the status of edge devices (e.g., health, performance).

- Sends edge model updates, upgrades, and patches to maintain the devices' functionality and security.

- Receives the improved edge model and deploys it to the edge devices.
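The sketch below covers the monitoring and update sides of device management. It assumes devices report periodic heartbeats containing their model version and a few metrics. The staged, percentage-based rollout is an illustrative assumption and is not specified by the architecture above. `DeviceRegistry` and its methods are hypothetical names.

```python
import hashlib
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Device:
    device_id: str
    model_version: str
    last_heartbeat: float = 0.0
    metrics: dict = field(default_factory=dict)


class DeviceRegistry:
    def __init__(self, heartbeat_timeout_s: float = 300.0):
        self.devices: dict[str, Device] = {}
        self.timeout = heartbeat_timeout_s

    def heartbeat(self, device_id: str, model_version: str, metrics: dict,
                  now: Optional[float] = None) -> None:
        """Record a status report (health/performance metrics) from a device."""
        now = time.time() if now is None else now
        dev = self.devices.setdefault(device_id, Device(device_id, model_version))
        dev.model_version = model_version
        dev.last_heartbeat = now
        dev.metrics = metrics

    def unhealthy(self, now: Optional[float] = None) -> list[str]:
        """Devices whose last heartbeat is older than the timeout."""
        now = time.time() if now is None else now
        return sorted(d.device_id for d in self.devices.values()
                      if now - d.last_heartbeat > self.timeout)

    def rollout_cohort(self, target_version: str, percent: int) -> list[str]:
        """Deterministically pick roughly percent% of not-yet-updated devices.

        Hashing (device_id, target_version) keeps each device's bucket stable
        for a given release, so widening a rollout (say 10% -> 50%) only adds devices.
        """
        cohort = []
        for dev_id, dev in self.devices.items():
            if dev.model_version == target_version:
                continue  # already running the target version
            digest = hashlib.sha256(f"{dev_id}:{target_version}".encode()).digest()
            bucket = int.from_bytes(digest[:2], "big") % 100
            if bucket < percent:
                cohort.append(dev_id)
        return sorted(cohort)
```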

2.2 Model Continuous Improvement

Data Collection and Storage:

- Collects model inference data from the edge devices and separates it into positive and negative results.

- Stores opt-in data along with model inference results.

- Inference data that is either positive or low-confidence is sent for review, confirmation, and annotation.

- Data collection must be managed reliably across unreliable networks and a vast number of devices.
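The routing rules in the list above can be sketched as a small triage function. The sketch assumes each inference record carries a confidence score, a positive/negative flag, and an opt-in flag. The 0.5 low-confidence threshold, the `Route` categories, and all other names are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    DISCARD = "discard"  # not opted in: not stored
    STORE = "store"      # stored with its inference result, no human review
    REVIEW = "review"    # sent for review, confirmation, and annotation


@dataclass
class InferenceRecord:
    device_id: str
    label: str
    confidence: float
    is_positive: bool  # the edge model reported a positive result
    opted_in: bool


def route(record: InferenceRecord, low_conf: float = 0.5) -> Route:
    """Decide what happens to one inference record (thresholds are assumptions)."""
    if not record.opted_in:
        return Route.DISCARD
    if record.is_positive or record.confidence < low_conf:
        return Route.REVIEW
    return Route.STORE


def partition(records) -> dict:
    """Group a batch of records by route."""
    buckets = {r: [] for r in Route}
    for rec in records:
        buckets[route(rec)].append(rec)
    return buckets
```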

Observability Large Model Inference:

- Runs the collected data through Observability Large Models and compares the results with the edge model's inference results to identify potential false negatives and model drift.
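The following is a minimal sketch of the comparison step, simplified to a binary detection per sample. The `Prediction` type, the 0.8 reference-confidence cutoff, and the label strings are assumptions. The observability model itself is abstracted away as whatever produced the reference prediction. Tracking `disagreement_rate` over time is one possible drift signal.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    positive: bool
    confidence: float


def compare(edge: Prediction, reference: Prediction, ref_min_conf: float = 0.8) -> str:
    """Label one sample by comparing the edge model with the larger reference model."""
    if reference.confidence < ref_min_conf:
        return "uncertain"  # the reference model is not confident either
    if reference.positive and not edge.positive:
        return "potential_false_negative"
    if edge.positive and not reference.positive:
        return "potential_false_positive"
    return "agree"


def disagreement_rate(pairs) -> float:
    """Fraction of confidently judged samples on which the two models disagree."""
    labels = [compare(e, r) for e, r in pairs]
    judged = [lab for lab in labels if lab != "uncertain"]
    if not judged:
        return 0.0
    return sum(lab != "agree" for lab in judged) / len(judged)
```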

Data Annotation:

- Human annotators review, confirm, and annotate data identified as low confidence, false positives, or false negatives.

Data Augmentation, Synthesis and Curation:

- Processes potential low-confidence, false positive, and false negative data that may have been annotated and confirmed by humans.

- Performs data augmentation and synthesis to increase data variation.

- Curates the dataset to fine-tune and improve the models.
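To make augmentation and curation concrete, the sketch below works on tiny grayscale images represented as nested lists so that it stays dependency-free; a real pipeline would use an image library. Horizontal flips, brightness jitter, exact-duplicate removal by hash, and a per-label cap are illustrative choices, not the techniques of any particular platform. Data synthesis, for example with generative models, is not shown.

```python
import hashlib
import random
from collections import defaultdict

Image = list[list[int]]  # grayscale pixel rows, values 0-255


def hflip(img: Image) -> Image:
    return [row[::-1] for row in img]


def adjust_brightness(img: Image, delta: int) -> Image:
    # Clamp to the valid pixel range after shifting
    return [[min(255, max(0, px + delta)) for px in row] for row in img]


def augment(sample, rng: random.Random, n: int = 2):
    """Yield n randomly augmented copies of an (image, label) pair."""
    img, label = sample
    for _ in range(n):
        out = hflip(img) if rng.random() < 0.5 else img
        out = adjust_brightness(out, rng.randint(-30, 30))
        yield out, label


def curate(samples, max_per_label: int):
    """Drop exact duplicates, then cap each label to keep the set balanced."""
    seen, per_label, curated = set(), defaultdict(int), []
    for img, label in samples:
        key = hashlib.sha256(repr(img).encode()).hexdigest()
        if key in seen or per_label[label] >= max_per_label:
            continue
        seen.add(key)
        per_label[label] += 1
        curated.append((img, label))
    return curated
```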

Training and Fine-Tuning:

- Combine existing datasets with fine-tuning datasets to train and fine-tune the model.
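The list above does not specify how existing and fine-tuning data are combined. One simple, common option is to keep every new fine-tuning sample and mix in a random sample of the existing dataset, a practice often called replay, to reduce forgetting of previously learned cases. The sketch below assumes that approach; the `build_training_set` name and `replay_ratio` parameter are hypothetical.

```python
import random


def build_training_set(existing, finetune, replay_ratio: float = 1.0, seed: int = 0):
    """Mix all fine-tuning samples with a random replay sample of existing data.

    replay_ratio is the number of existing samples drawn per fine-tuning sample,
    capped at the size of the existing dataset.
    """
    rng = random.Random(seed)
    n_replay = min(len(existing), int(len(finetune) * replay_ratio))
    mixed = list(finetune) + rng.sample(list(existing), n_replay)
    rng.shuffle(mixed)  # avoid ordering effects between old and new data
    return mixed
```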

Validation and Deployment:

- Compress the improved model, for example through pruning and quantization, for deployment on edge devices.

- Evaluate and validate that the new model's accuracy and inference performance exceed those of the previous model.
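The sketch below illustrates two of these steps under simplifying assumptions: symmetric per-tensor int8 quantization of a flat list of weights, and a promotion gate. The gate reads "exceeds" as strictly higher validation accuracy with no increase in on-device latency. Pruning and production toolchain quantization (per-channel scales, calibration data) are not shown, and all names are hypothetical.

```python
from dataclasses import dataclass


def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: w ~= q * scale, q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    return [v * scale for v in q]


@dataclass
class EvalResult:
    accuracy: float    # on a held-out validation set
    latency_ms: float  # measured on the target edge device


def should_promote(candidate: EvalResult, baseline: EvalResult) -> bool:
    """Promote only if accuracy improves and on-device latency does not regress."""
    return (candidate.accuracy > baseline.accuracy
            and candidate.latency_ms <= baseline.latency_ms)
```

Rounding to the nearest integer bounds the per-weight reconstruction error by half of `scale`, which is why quantization accuracy must be re-validated on the compressed model rather than assumed.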

Integration, Versioning, Monitoring, Dashboard, Review, and Approval:

- Integrate all components by facilitating data transfer, messaging, and queuing.

- Implement version control for all components.

- Establish monitoring, dashboards, and review and approval processes for every component.
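In production, this wiring is usually handled by an orchestrator such as Airflow, Argo Workflows, or Kubeflow. The sketch below uses only Python's standard-library `graphlib` to show two ideas from the list: running components in dependency order and attaching a content-addressed version ID to each output. Stage names are hypothetical, and stage outputs are assumed to be JSON-serializable.

```python
import hashlib
import json
from graphlib import TopologicalSorter
from typing import Callable


def content_version(obj) -> str:
    """Content-addressed version ID: identical content gives an identical ID."""
    blob = json.dumps(obj, sort_keys=True).encode()
    return hashlib.sha256(blob).hexdigest()[:12]


def run_pipeline(stages: dict[str, Callable[[dict], object]],
                 deps: dict[str, set[str]]) -> dict[str, dict]:
    """Run stages in dependency order, recording each output with a version ID.

    deps maps a stage to the set of stages it depends on. Each stage receives
    the outputs of its upstream stages, keyed by stage name.
    """
    results: dict[str, dict] = {}
    for name in TopologicalSorter(deps).static_order():
        upstream = {d: results[d]["output"] for d in deps.get(name, set())}
        output = stages[name](upstream)
        results[name] = {
            "output": output,
            "version": content_version({"stage": name, "output": output}),
        }
    return results
```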

3. Edge MLOps Challenges and Platform Comparison

When faulty MLOps causes systems to fail, rectifying issues such as model failures and system downtime often carries a substantial cost, and time and productivity suffer severely as well. A faulty MLOps framework can also derail an entire implementation through missed business opportunities, degraded model performance, and lost stakeholder confidence. In extreme cases, critical decision-making systems that rely on machine learning may produce inaccurate or biased outcomes, causing further financial and reputational damage.

Implementing an Edge MLOps practice faces several critical challenges, including:

- Limited Features in Commercial Off-the-Shelf MLOps Platforms: Many commercial off-the-shelf (COTS) MLOps platforms have a narrow scope and a restricted feature set, which makes it difficult for them to support all the components an end-to-end solution requires. The most significant gaps in COTS MLOps platforms are the lack of an Observability Large Model component, inadequate integration across components, and insufficient device management capabilities.

- Specialized Expertise Required: Building the infrastructure and components on top of these platforms, and integrating them into the necessary pipeline, demand extensive technical skills, expertise, and significant effort.

| Feature | SiMa.ai | Roboflow | Amazon SageMaker | DeepEdge | Edge Impulse |
|---|---|---|---|---|---|
| Model Training | ✓ | ✓ | ✓ | ✓ | ✓ |
| Versioning | ✓ | ✓ | ✓ | ✓ | ✓ |
| Data Annotation | ✓ | ✓ | ✓ | ✓ | |
| Monitoring | ✓ | ✓ | | | |
| Data Augmentation | ✓ | ✓ | | | |
| Deployment | ✓ | ✓ | ✓ | | |
| Data Collection and Storage | ✓ | ✓ | | | |
| Integration | ✓ | | | | |
| Observability Large Model Inference | ✓ | | | | |
| Device Management | ✓ | | | | |

✓ indicates the described feature is available.

Table: Comparison of Representative MLOps Platforms

The table above compares several representative MLOps platforms by the functionality they offer. Other categories of MLOps platforms also exist: some (e.g., Labelbox, Scale AI) focus on data annotation, while others (e.g., Weights & Biases, MLflow) focus on experiment tracking and versioning. Most MLOps platforms lack device management, which is essential for Edge MLOps. The SiMa.ai ONE Platform for Edge AI provides comprehensive end-to-end MLOps capabilities, especially the critical Integration, Observability Large Model Inference, and Device Management components.

4. Edge MLOps Reality and Gaps

In one sense, computer and information technology revolves around automation and reducing manual labor, and the industry has added layer upon layer of abstraction to conceal the underlying complexity: chip, board, operating system, SDK, API, application, and SaaS. Yet the operations of most ML systems remain highly manual. The exceptions are a handful of tech companies that manage numerous models, have in-house expertise, or can afford to invest in integrating, automating, and optimizing these pipelines. Common practice today is to build and integrate MLOps pipelines with tools such as Kubernetes, Airflow, Argo Workflows, and Kubeflow, all of which require specialized data and software engineering skills. As a result, there are significant gaps in the industry for enabling most companies, which may lack software engineering expertise, to implement MLOps, and the newer Edge MLOps, quickly.

SiMa.ai addresses these gaps by integrating all end-to-end Edge MLOps capabilities, eliminating MLOps complexity, and making it as simple as possible, thus filling the industry's need for accessible, efficient solutions, especially for companies with limited software engineering capabilities.

5. SiMa.ai’s Criteria for Effective MLOps

At SiMa.ai, effective MLOps means providing an all-encompassing, integrated solution that addresses every aspect of machine learning workflows at the edge. Our criteria for effective MLOps include:

- Comprehensive Feature Set: Our platform supports the full spectrum of features necessary for MLOps, eliminating the need for multiple disjointed tools. This includes Data Collection and Storage, Model Re-training, Versioning, Data Annotation, Monitoring, Data Augmentation, Deployment, Integration, Observability Large Model Inference, and Device Management.

- Ease of Use: We prioritize simplicity and user-friendliness without compromising on functionality. Our intuitive interface and seamless integration capabilities ensure that teams can rapidly deploy, monitor, and manage their machine learning models, regardless of their technical expertise.

- Scalability and Flexibility: Our solutions are built to scale, adapting to low-power constraints, unstable connections, large numbers of edge devices, growing datasets, and increasing model complexity. Whether you are a startup or a large enterprise, SiMa.ai can accommodate your MLOps requirements efficiently.

- End-to-End Pipeline Integration: SiMa.ai excels in integrating all aspects of the machine learning pipeline, from data collection and preprocessing to model deployment and inference. This holistic approach ensures continuous and smooth operation, reducing downtime and operational inefficiencies.

- Advanced Observability and Monitoring: We provide real-time observability and monitoring tools to ensure optimal model performance and swift identification of issues. This minimizes risks and ensures the reliability of machine learning applications in production environments.

- Robust Security and Compliance: Maintaining the highest standards of data security and regulatory compliance is a cornerstone of our platform. SiMa.ai ensures that your data and models are protected, adhering to relevant industry standards and practices.

With these stringent criteria, SiMa.ai sets itself apart in the competitive MLOps landscape. Our commitment to delivering a superior, comprehensive, and user-friendly MLOps platform invites you to experience the difference. By adhering to these unparalleled principles, SiMa.ai not only addresses the challenges in edge MLOps but also positions itself as a leader in fostering innovation and operational excellence in the field.

We encourage you to contact us to explore the unique advantages SiMa.ai offers and discover how we can empower your edge MLOps strategy, transforming your initiatives into remarkable successes with ease and efficiency.