Machine Learning is Accelerating the Commercialization of Robotics and Autonomous Systems

Robotics and autonomous systems (RAS) are showing up everywhere in our lives, from cleaning systems to order delivery and now even robotic pets that improve senior citizens’ mental health. These RAS offerings include service robots bringing benefits to humanity and industrial robots that are enhancing job safety by performing the more dangerous aspects of certain jobs. Though the number of RAS products continues to ramp, there are still many areas needing further development and subsequent certification, such as with autonomous vehicles and drones, prior to RAS deployments becoming ubiquitous.

A key catalyst for increasing RAS adoption is the transition from automated systems to autonomous ones. Automated systems still have a human in the loop, either locally or remotely, managing system behavior [Reference Link]. The human involvement these systems need limits how many can be deployed. Autonomous systems, on the other hand, are fully self-contained and can complete a complex mission without any human involvement, so they can scale to much greater numbers. The key question then becomes: what needs to be added to an automated system to make it autonomous? This is where machine learning (ML) comes in: ML is the key technology needed to turn automated systems into autonomous ones.

What is needed to catalyze the explosion of RAS product adoption?

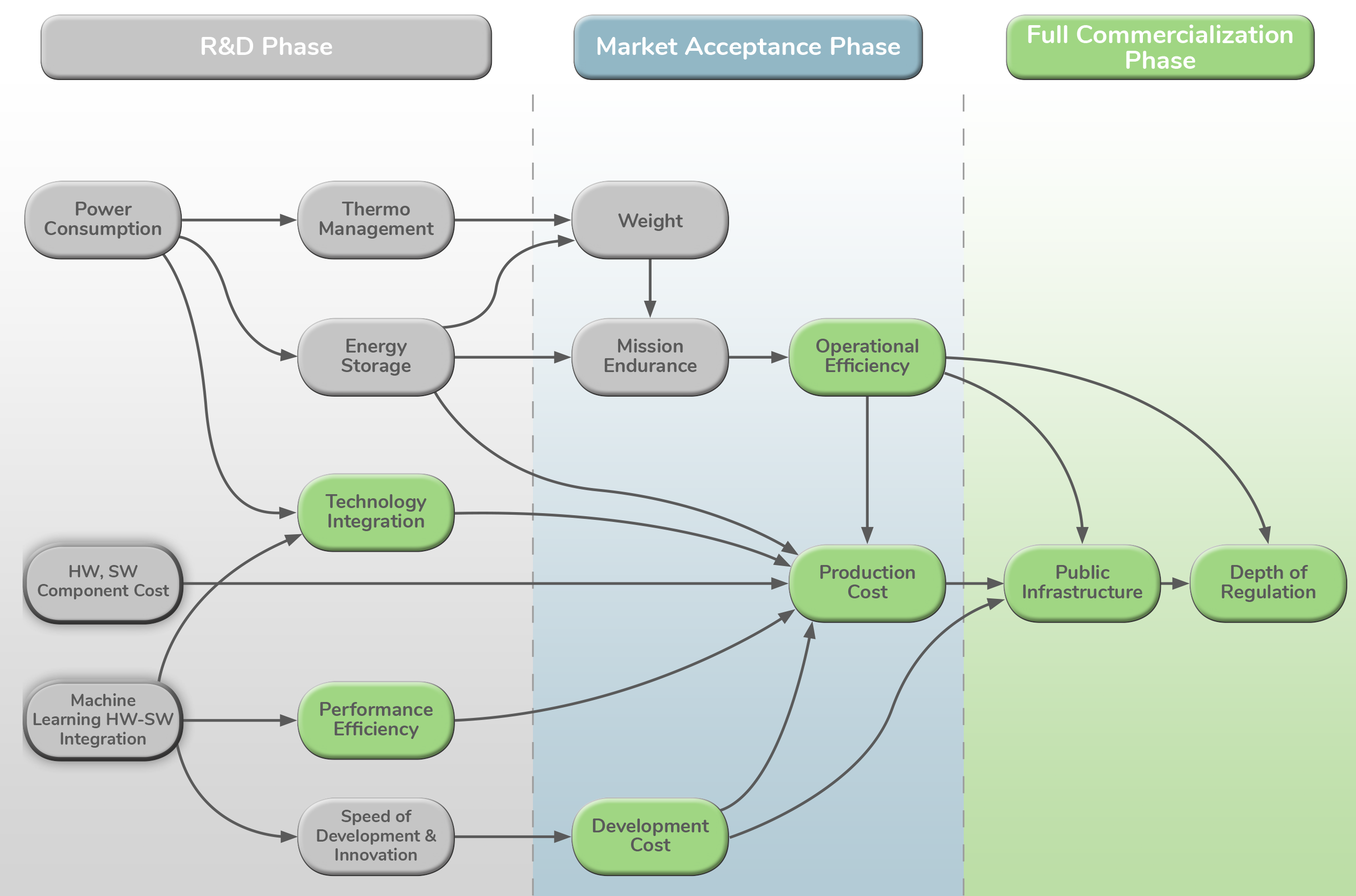

Several facets need to be addressed to enable a vast increase in RAS product adoption. With respect to the ML capabilities of RAS products, the most important are development costs, production costs, performance efficiency, operational efficiency, technology integration, public infrastructure integration, and depth of regulation. Each is discussed below.

- Development costs depend on availability and affordability of resources. This includes the availability and maturity of an affordable talent pool along with hardware, software, and system integration tools. Currently there is a shortage of ML experts capable of using these complex tools. To mitigate this talent shortage, the software and hardware tools need to be very easy to use straight out of the box and flexible enough to support a variety of ML networks, frameworks and operators.

- Production costs, driven primarily by supply chain costs, need to be low enough to support pricing that the market will bear. The production cost curve hits an inflection point as volumes increase, which drives down unit costs and increases the alternative options available within the supply chain. However, a low production cost is not the only factor that drives adoption: engineering and manufacturing teams must also be able to easily use the ML components in their RAS products. For example, if the engineering team is unable to adopt a lower cost device because of the complexities of the ML, then a low production cost is irrelevant. Hence, low production costs and ease of use of the ML products go hand-in-hand to drive adoption.

- Performance efficiency needs to meet a minimum threshold, which is highly dependent on algorithm maturity and hardware performance efficiency. Algorithm maturity is achieved when the algorithms have converged enough to transition out of the R&D phase into production with extended coverage in many domains. Hardware performance efficiency is achieved when more of the functions supporting the autonomy stack are aggregated at lower SWaP-C (size, weight, power, and cost) while still delivering the desired computation capacity. Performance efficiency is tightly correlated to the level of technology integration, as efficient algorithms will perform poorly if the wrong hardware is used. To prevent this, hardware-software co-design is required for complex development in the machine learning domain.

- Operational efficiency has multiple subdimensions that affect performance. The subdimensions common to most autonomous systems are: (1) a cloud backend service that supports the mission or operations; (2) a communication backbone or infrastructure that communicates with fielded autonomous systems; (3) the deployment infrastructure needed to interface between OEM production and deployment to provide system updates; (4) a service ecosystem that ensures scalable and sustainable autonomous operations, including retailers, repair shops, landing zones or parking areas, cleaning services, and charging or refueling services; and (5) safety for humans interacting with the RAS during both development and field operations, which is one of the most critical aspects of the operation.

- Technology integration is tightly correlated to performance efficiency. Integration here spans both software and hardware, which makes software-hardware co-design critical for more complex functional integration. Hardware integration, commonly realized as an SoC (System on Chip), combines commonly used functions for ML, computer vision or perception, and image processing with fundamental building blocks such as accelerators for linear algebra routines like those defined by BLAS. Software integration should be tightly coupled to the SoC to provide additional design capabilities that enable further integration into the overall system software design. Most of the autonomous systems in development run primarily in software. In the fully commercialized phase, software is more of an application or set of applications running on a mature operating system, with the remaining functions implemented in dedicated hardware.

- Public infrastructure integration to support autonomous systems lags behind because the economic incentive and the necessity are not yet there. Until a critical mass of demand drives supply, the infrastructure piece will not be broadly available.

- Depth of regulation of autonomous systems is shallow early on: regulation focuses on high-level goals, with very little technical regulation, because the technology is changing rapidly and its social impacts are not yet well understood. This vagueness will continue until the industry starts to converge on technology and autonomous systems become more visible in people’s daily lives.

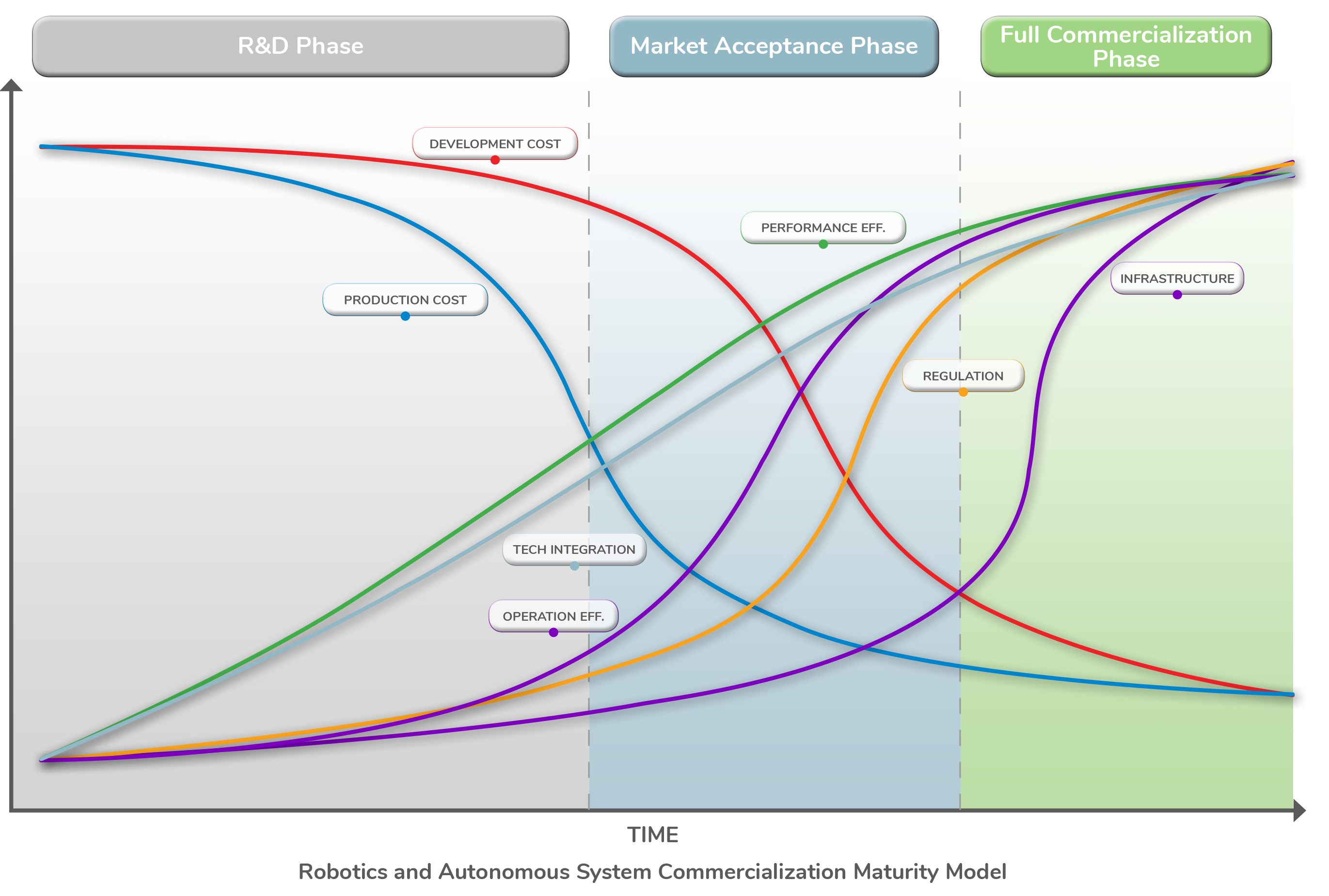

Machine learning hardware-software integration driving RAS market acceptance

A pattern emerges when the curves of the seven individual dimensions are combined. It’s worth noting that most of the inflection and intersection points occur at the start of, or within, the Market Acceptance Phase. The curve for each dimension is specific to the particular system within each industry. However, the generalized model here does provide a good representation of the relationships between the dimensions. For example, Development Cost decreases at a slower rate than Production Cost, while Depth of Regulation and Infrastructure Integration significantly lag behind Technology Integration and Performance Efficiency. Operational Efficiency also lags behind Technology Integration and Performance Efficiency. When most, or perhaps all, of the curves have made one or more intersections and passed their respective inflection points, the Full Commercialization Phase is very much a reality, assuming the application use cases have found a solid landing during the Market Acceptance Phase.
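The idea of curves passing their inflection points can be made concrete with a small sketch. It assumes, purely for illustration, that each dimension follows a logistic S-curve over a unitless time axis; the function names, the curve shape, and the midpoint values below are hypothetical placeholders and are not taken from the figure.

```python
import math
from typing import Dict


def logistic(t: float, midpoint: float, steepness: float = 1.0) -> float:
    """Illustrative S-curve in (0, 1); its inflection point is at t == midpoint."""
    return 1.0 / (1.0 + math.exp(-steepness * (t - midpoint)))


def past_inflection(t: float, midpoints: Dict[str, float]) -> Dict[str, bool]:
    """For each dimension, report whether time t is at or beyond its inflection point."""
    return {name: t >= midpoint for name, midpoint in midpoints.items()}


def share_past_inflection(t: float, midpoints: Dict[str, float]) -> float:
    """Fraction of dimensions whose curves have passed their inflection points."""
    flags = past_inflection(t, midpoints)
    return sum(flags.values()) / len(flags)


# Placeholder midpoints (hypothetical, unitless). They only encode the ordering
# described in the text: regulation lags technology and performance.
EXAMPLE_MIDPOINTS = {
    "technology_integration": 3.0,
    "performance_efficiency": 3.5,
    "operational_efficiency": 5.0,
    "depth_of_regulation": 7.0,
}

if __name__ == "__main__":
    for t in (2.0, 5.0, 8.0):
        print(t, share_past_inflection(t, EXAMPLE_MIDPOINTS))
```

A share close to 1.0 would correspond to the condition described above, where most or all of the curves have passed their inflection points. Real curves would have to be estimated per system and per industry.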

Across all of the dimensions above, the current challenges with autonomous system designs are associated with limited operational design domain (ODD) coverage. This is why only autonomous systems with environmentally controlled or very strictly controlled ODDs have been used in small-scale production. Examples of these strictly controlled ODDs include warehouses, shipping docks, mining operations, small community-level transportation, and farms. Airborne autonomous systems have been tested under special exceptions from regulation authorities in limited flight zones. The key question here is: what is the root cause of these ODD limitations? To trace this to the root cause, one should “follow the data.”

Tracing to the root cause of limited ODD

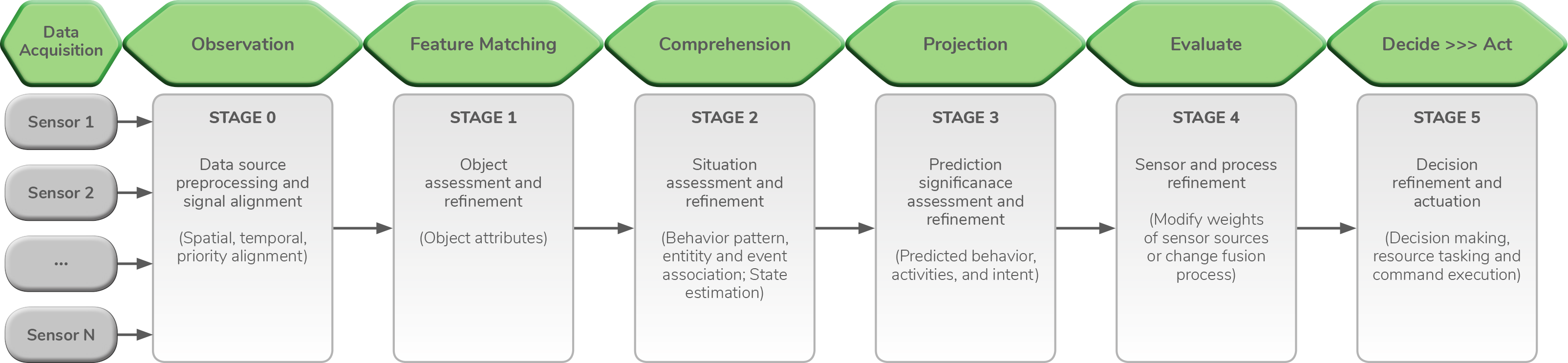

A complex decision is only as good as the information it is based upon, and this holds for autonomous systems. Sensors provide the data; algorithms downstream from the sensors process it and ultimately refine these multiple stages of data into a set of decisions and actuations. The commonly used pipeline of data processing stages shown in the accompanying figure serves as the basis for discussion.

In tracing the processing stages, one quickly observes that this pipeline is very similar to the OODA (observe–orient–decide–act) loop used in military strategies, as paraphrased on the top line tags [Reference Link]. Each stage of the sensor data fusion provides further refinement to support the final stage of decision and actuation. If this is a fairly well understood data pipeline, then what is causing the ODD limitation? There are two ends of the spectrum: the raw data feed from the sensors and the decision-making algorithms.

In most of the autonomous systems, the majority of the data processing and intelligence takes place in stages 4 and 5 as stages 0 through 3 are fairly low on intelligence. The sensors are used for only feeding raw data. This model works great for software-centric autonomous systems for multiple reasons, which are topics for another blog. There are, however, benefits to adding more intelligence to earlier stages to perform sensor data fusion. One benefit is to provide early warning indicators for possible regions of interest either to downstream processing stages with priority tags, or to a separate system responsible for handling immediate confirmation and possible actuation.
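The early warning idea can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a description of any particular product: the `Detection` type, the `tag_early` and `downstream_order` functions, and the 10 m range threshold are all hypothetical. An early stage attaches a priority tag to each reading, and the downstream stage consumes readings in priority order using a heap.

```python
import heapq
from dataclasses import dataclass, field
from typing import Iterable, List, Tuple


@dataclass(order=True)
class Detection:
    # Lower number = more urgent; heapq always pops the smallest item first.
    priority: int
    # Arrival order breaks ties so equal-priority items keep their sequence.
    seq: int
    label: str = field(compare=False)
    range_m: float = field(compare=False)


def tag_early(readings: Iterable[Tuple[str, float]],
              near_range_m: float = 10.0) -> List[Detection]:
    """Hypothetical early-stage check: flag anything closer than near_range_m as urgent."""
    tagged = []
    for seq, (label, range_m) in enumerate(readings):
        priority = 0 if range_m < near_range_m else 1
        tagged.append(Detection(priority, seq, label, range_m))
    return tagged


def downstream_order(tagged: List[Detection]) -> List[str]:
    """Return labels in the order a later stage would process them."""
    heap = list(tagged)
    heapq.heapify(heap)
    ordered = []
    while heap:
        ordered.append(heapq.heappop(heap).label)
    return ordered


if __name__ == "__main__":
    readings = [("sign", 40.0), ("pedestrian", 4.0), ("tree", 60.0)]
    print(downstream_order(tag_early(readings)))
```

In this sketch the nearby pedestrian is handled before the two distant objects even though it arrived second. The same tags could equally be routed to a separate system responsible for immediate confirmation and actuation.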

A high-level trace indicates that sensor capability limitations are a primary obstacle to expanding the ODD. The machine learning algorithms used in stages 4 and 5 are also a very significant limiting factor on the current ODD. The good news is that both ends of the spectrum have a path forward towards the Market Acceptance Phase and full commercialization.

Connecting the technologies toward commercialization

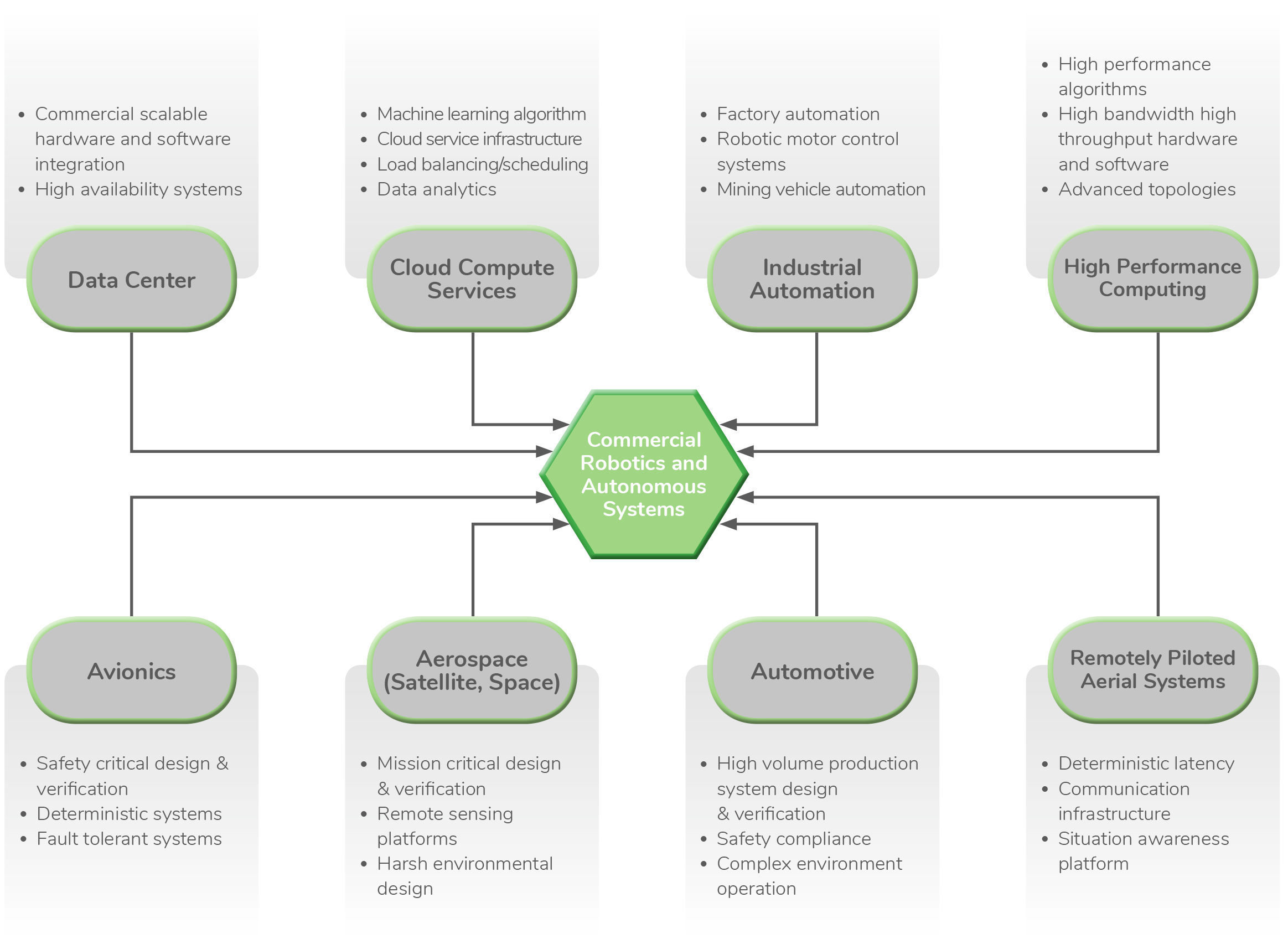

Progress and innovation are often built upon existing technologies. RAS platforms such as unmanned aerial vehicles (UAV) and unmanned ground vehicles (UGV) are currently piloted remotely, hence the term RPAS (remotely piloted aircraft systems) [Reference Link]. The trend from automated operation towards fully autonomous operation has driven the need for convergence of multi-domain expertise and its integration into a RAS platform. It is a convergence not only of technologies but also of engineering talent from multiple domains coming together to innovate. Some of the key subsystems needed to build an autonomous vehicle include:

- High spectral range sensors with a wide field of view and long ranges

- Sensor data fusion subsystem

- Localization and mapping subsystem

- Perception and prediction

- Path planning and intelligent decision-making

All of the above items contain elements from different industries. For example, to satisfy compute bandwidth and capacity requirements, the hardware platform borrows from the data center and high performance computing domains. The critical parameters directly relevant to the RAS product are:

- Peer-to-peer networking, which enables many options for network topology, options for fault tolerant system design, and options for load balancing and seamless scheduling

- System memory and processing bandwidth for data movement

- Deterministic communication for mission critical event handling

- Optimized processing units for machine learning operations

- Scalable hardware-software integration for performance

For ODD coverage, on-road testing is not enough, and physics-based simulation is a must-have to extend the coverage as broadly as possible. Though not directly deployed in the field, the simulation of the scenarios or ODD should be based on the deployed systems, including both hardware and software.

Connecting the various domain expertise into the RAS products from a technology perspective is informative. However, the dependency diagram connecting the core commercial capabilities provides additional insights into the commercialization path.

Vision of the future

In the near future, more and more machine learning hardware-software integration will happen at a commercial scale, further increasing the transition velocity into the Market Acceptance Phase. If artificial intelligence is viewed as the “spirit” of an autonomous system, then machine learning is a key building block towards true general intelligence.

In light of this, autonomous systems require breakthroughs in the perception technology domain, which is a combination of sensors and algorithms, to provide true situational awareness and immersion. With respect to the six stages of data processing illustrated earlier, sensor innovation could mean a multi-modal sensor with limited or integrated intelligence being used to aggregate stages 0 to 3 and provide later stages with object level data for decision making, thus reducing the downstream complexity by moving more functions into lower levels or closer to the metal.

Machine learning at the edge is where this transition will happen. SiMa.ai’s MLSoC™ and associated SDK provide the foundational elements for enabling innovative customers to address the three commercial capabilities required to accelerate the transition into the Market Acceptance Phase. By helping to enable this transition, SiMa.ai is paving the way to accelerate adoption of autonomous systems so the world can enjoy the great benefits of these ML-enabled RAS products much sooner!

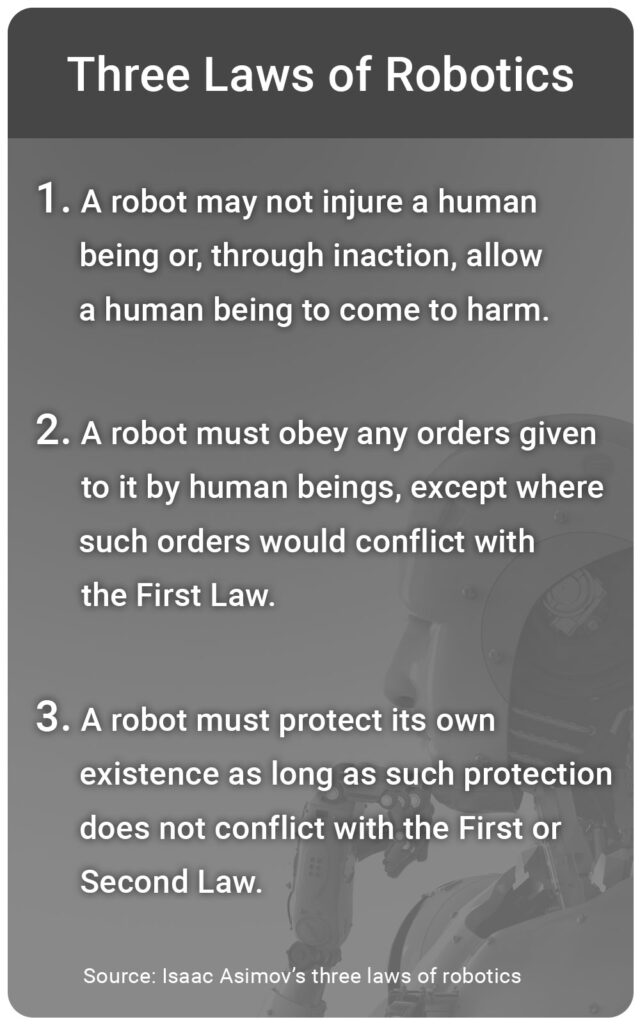

Perhaps one day soon, Isaac Asimov’s three laws of robotics will have to be implemented as part of artificial intelligence (AI) ethics requirements.

About the author: Ching Hu is a member of the technical staff at SiMa.ai covering robotics system architecture, functional safety, and system cybersecurity. His passion is all things robotics, as can be seen from his career trajectory, in which he has worked on system design and architecture for commercial avionics, aerospace & defense platforms, wireless communication, and semiconductors. Additionally, he has more than 10 years of software engineering experience ranging from firmware to UX design. He has authored numerous articles and presented at various conferences on topics including autonomous vehicles, AI cybersecurity, and space applications. Ching holds engineering degrees in optics and mechanical engineering as well as ISO 26262 functional safety engineer certification from TÜV SÜD.
